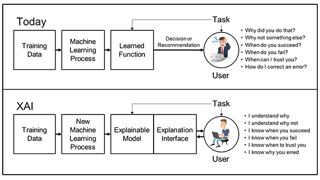

Explainable AI (XAI), also called Interpretable AI or Explainable Machine Learning (XML), is artificial intelligence (AI) in which humans can understand the decisions or predictions made by the AI. It contrasts with the "black box" concept in machine learning, where even a system's designers cannot explain why it arrived at a specific decision. By refining the mental models of users of AI-powered systems and dismantling their misconceptions, XAI promises to help users perform more effectively. XAI may be an implementation of the social right to explanation, but it is relevant even where there is no legal right or regulatory requirement. For example, XAI can improve the user experience of a product or service by helping end users trust that the AI is making good decisions. The aim of XAI is thus to explain what has been done, what is being done now, and what will be done next, and to unveil the information on which these actions are based. These characteristics make it possible (i) to confirm existing knowledge, (ii) to challenge existing knowledge, and (iii) to generate new assumptions.

The algorithms used in AI can be divided into white-box and black-box machine learning (ML) algorithms. White-box models are ML models whose results are understandable to experts in the domain. Black-box models, on the other hand, are extremely hard to explain and can hardly be understood even by domain experts.

XAI algorithms are considered to follow three principles: transparency, interpretability and explainability. Transparency is given “if the processes that extract model parameters from training data and generate labels from testing data can be described and motivated by the approach designer”. Interpretability describes the possibility of comprehending the ML model and presenting the underlying basis for decision-making in a way that is understandable to humans. Explainability is recognized as important, but a joint definition is not yet available.
It is suggested that explainability in ML can be considered as “the collection of features of the interpretable domain, that have contributed for a given example to produce a decision (e.g., classification or regression)”. Algorithms that meet these requirements provide a basis for justifying decisions, tracking and thereby verifying them, improving the algorithms, and exploring new facts.

Sometimes a white-box ML algorithm that is interpretable in itself can also achieve high accuracy. This is especially important in domains like medicine, defense, finance and law, where it is crucial to understand the decisions and build trust in the algorithms. Many researchers argue that, at least for supervised machine learning, the way forward is symbolic regression, where the algorithm searches the space of mathematical expressions to find the model that best fits a given dataset.

AI systems optimize behavior to satisfy a mathematically specified goal system chosen by the system designers, such as the command "maximize accuracy of assessing how positive film reviews are in the test dataset". The AI may learn useful general rules from the training set, such as "reviews containing the word 'horrible' are likely to be negative". However, it may also learn inappropriate rules, such as "reviews containing 'Daniel Day-Lewis' are usually positive". Such rules may be undesirable if they are deemed likely to fail to generalize outside the training set, or if people consider the rule to be "cheating" or "unfair". A human can audit the rules in an XAI to get an idea of how likely the system is to generalize to future real-world data outside the test set.

From Wikipedia
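
The film-review example can be made concrete with a minimal sketch of a white-box classifier whose learned rules a human can read and audit. Everything here is an assumption made for illustration and does not come from the quoted text: the toy `TRAIN` reviews, the smoothed per-word log-odds scoring, and the helper names `fit_word_weights`, `predict` and `explain`.

```python
from collections import Counter
import math

# Hypothetical toy training data: (review text, label), 1 = positive, 0 = negative.
TRAIN = [
    ("a horrible plot and horrible acting", 0),
    ("horrible pacing and a dull script", 0),
    ("daniel day-lewis is superb in this film", 1),
    ("daniel day-lewis carries a wonderful story", 1),
    ("a wonderful and moving film", 1),
    ("a dull and forgettable film", 0),
]


def tokenize(text):
    """Lowercase and split on whitespace (deliberately simplistic)."""
    return text.lower().split()


def fit_word_weights(examples, smoothing=1.0):
    """Return one smoothed log-odds weight per word.

    A weight above 0 means the word appears in a larger share of positive
    reviews; below 0, in a larger share of negative reviews.
    """
    pos, neg = Counter(), Counter()
    for text, label in examples:
        # Count each word at most once per review (document frequency).
        (pos if label == 1 else neg).update(set(tokenize(text)))
    n_pos = sum(1 for _, label in examples if label == 1)
    n_neg = len(examples) - n_pos
    weights = {}
    for word in set(pos) | set(neg):
        p_pos = (pos[word] + smoothing) / (n_pos + 2 * smoothing)
        p_neg = (neg[word] + smoothing) / (n_neg + 2 * smoothing)
        weights[word] = math.log(p_pos) - math.log(p_neg)
    return weights


def predict(weights, text):
    """Classify as positive (1) if the summed word weights exceed zero."""
    score = sum(weights.get(word, 0.0) for word in set(tokenize(text)))
    return 1 if score > 0 else 0


def explain(weights, text):
    """Return (word, weight) pairs for known words, largest magnitude first."""
    pairs = [(w, weights[w]) for w in set(tokenize(text)) if w in weights]
    return sorted(pairs, key=lambda pair: abs(pair[1]), reverse=True)


weights = fit_word_weights(TRAIN)

# Audit: inspect which words drive a prediction.
for word, weight in explain(weights, "a horrible film with daniel day-lewis"):
    print(f"{word:>10s}  {weight:+.2f}")
```

Because the model is just a table of word weights, an auditor can see that it gives the tokens "daniel" and "day-lewis" positive weights as large as the negative weight on "horrible". On this toy data, a review that calls the film horrible but mentions the actor is still classified as positive. That is the kind of rule a reviewer might flag as unlikely to generalize.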

The AI model quantifies collision risks and clarifies decision-making processes, paving the way for safer and more autonomous ship navigation.