Overview

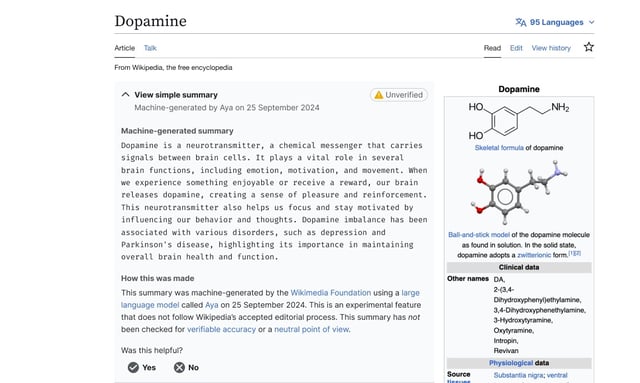

- The Wikimedia Foundation launched an opt-in, two-week trial of AI-generated article summaries on June 2 but paused it on June 11 in response to editor feedback.

- The experiment used Cohere’s open-weight Aya model to create concise summaries labeled with a yellow “unverified” badge at the top of mobile articles.

- Volunteer editors raised concerns that unvetted AI excerpts could introduce inaccuracies and bias, damaging Wikipedia’s reputation for reliability.

- Foundation spokespeople admitted the proposal lacked sufficient community consultation and pledged to improve transparency and dialogue before future trials.

- Wikimedia officials affirmed that any forthcoming AI tools will require an editor moderation workflow to keep human oversight central.