Overview

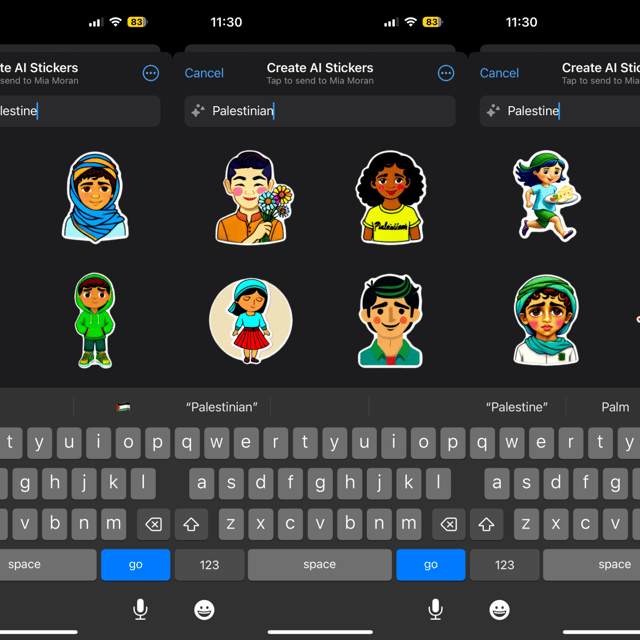

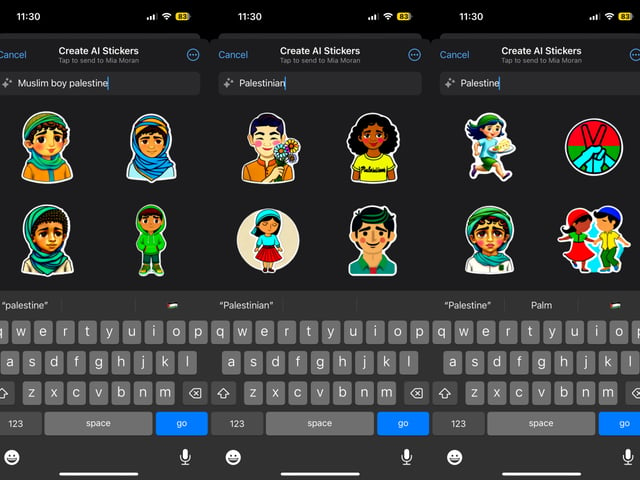

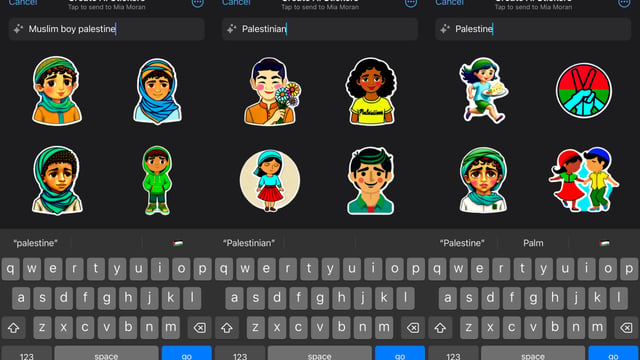

- WhatsApp's AI sticker generator allegedly produced images of Palestinian boys holding guns while producing peaceful images for Israeli boys, raising concerns about AI bias amid the escalating Israel-Hamas conflict.

- Prompts containing the terms 'Palestinian,' 'Palestine,' or 'Muslim boy Palestinian' returned stickers of boys wearing Islamic garments and appearing to hold AK-47 rifles, while prompts such as 'Israeli boy' or 'Jewish boy Israeli' returned images of characters smiling, dancing, or playing football.

- The AI tool, which combines Meta's text-processing abilities and image-generating capabilities, had previously stirred controversy by producing inappropriate or bizarre imagery during its testing phase.

- Meta's internal teams reportedly flagged the issue, and it is unclear how long these differences have persisted. Kevin McAlister, a Meta spokesperson, acknowledged that the AI models are imperfect and committed to continued improvement.

- This incident adds to the ongoing criticism of big tech's alleged bias and inability to ensure fair and balanced content, particularly during global conflicts such as the one between Israel and Hamas.