Overview

- The research, published September 1 in Nature Machine Intelligence, reports a wearable, noninvasive system that augments EEG‑based control with an AI assistant.

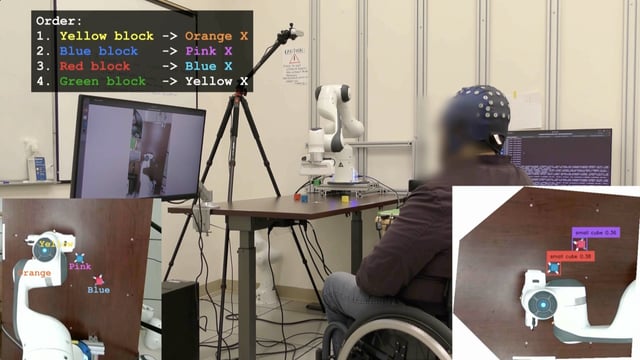

- Decoded brain signals were paired with a computer‑vision model that inferred user intent independently of eye movements to guide a cursor and a robotic arm.

- Across two tasks (moving a cursor to eight targets and using a robotic arm to relocate four blocks), AI assistance shortened completion times for all participants. The paralyzed participant completed the arm task, in about six and a half minutes, only when AI assistance was available.

- The technical approach used a hybrid adaptive decoder combining a convolutional neural network with a ReFIT‑like Kalman filter to translate EEG into movement commands.

- The study was funded by the NIH and the UCLA–Amazon Science Hub. UCLA filed a provisional patent, and conflicts of interest were disclosed. The authors call the results preliminary and plan to use larger datasets and more advanced co‑pilots.
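
To make the Kalman-filter stage of the decoder mentioned above more concrete, here is a minimal sketch of how a Kalman filter can smooth noisy velocity estimates of the kind a neural network might produce from EEG. It is not the authors' decoder: the class `ScalarKalman`, the function `smooth_velocities`, and every parameter value (`a`, `q`, `r`) are assumptions chosen for illustration. The ReFIT-style retraining step and the convolutional network are not modeled; the input is simply a list of made-up per-step velocity observations.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """One-dimensional Kalman filter over a single velocity component.

    All default values are illustrative assumptions, not values from the study.
    """
    a: float = 0.9    # assumed state transition: velocity decays slightly each step
    q: float = 0.05   # assumed process-noise variance
    r: float = 0.5    # assumed observation-noise variance
    v: float = 0.0    # current velocity estimate
    p: float = 1.0    # variance of the current estimate

    def step(self, z: float) -> float:
        # Predict: propagate the estimate and its variance through the model.
        v_pred = self.a * self.v
        p_pred = self.a * self.p * self.a + self.q
        # Update: weight the new observation z by the Kalman gain.
        k = p_pred / (p_pred + self.r)
        self.v = v_pred + k * (z - v_pred)
        self.p = (1.0 - k) * p_pred
        return self.v


def smooth_velocities(observations):
    """Filter a sequence of (vx, vy) observations with one filter per axis."""
    fx, fy = ScalarKalman(), ScalarKalman()
    return [(fx.step(zx), fy.step(zy)) for zx, zy in observations]


# Hypothetical noisy velocity observations, standing in for network outputs.
noisy = [(1.0, 0.0), (1.4, -0.2), (0.6, 0.3), (1.1, 0.0)]
smoothed = smooth_velocities(noisy)
```

Treating each axis independently keeps the sketch short. A practical decoder would more likely use a joint state (position and velocity across axes) with matrix-valued noise terms fitted from calibration data.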