Overview

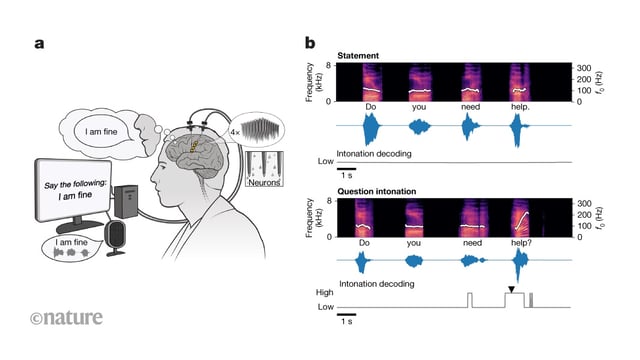

- UC Davis researchers have demonstrated a brain-computer interface that translates neural activity into expressive speech with a delay of about ten milliseconds, using 256 electrodes implanted in the ventral precentral gyrus.

- In trials with a patient with ALS, listeners understood roughly 60 percent of the words the system produced in unscripted conversation, compared with 4 percent of the patient's words without the interface.

- An AI-powered decoder trained on pre-implant voice recordings enables the prosthesis to replicate a user’s own voice and capture intonation, interjections and simple melodies.

- The approach departs from text-based communication devices: instead of relying on a spelling interface, it produces sound directly.

- The BrainGate2 trial is expanding enrollment as teams refine algorithms, improve signal processing and enhance implant design to boost real-world performance.