Overview

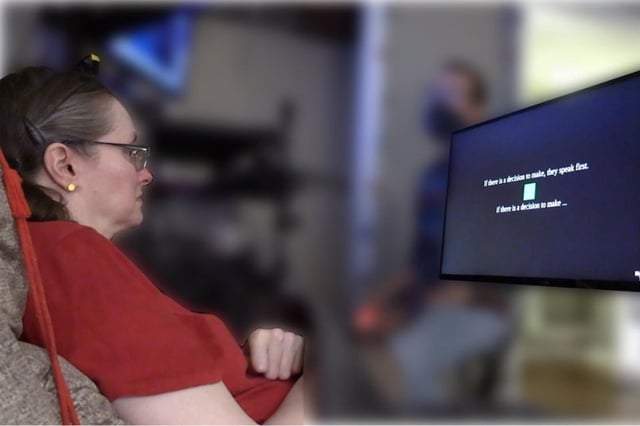

- Neuroscientists implanted microelectrode arrays in the motor cortex of four paralyzed participants to record the neural signals associated with inner speech.

- An AI model trained on these signals decoded cued sentences from a 125,000-word vocabulary with up to 74% accuracy.

- To prevent unintended capture, a mental-password mechanism kept inner-speech decoding off until participants thought “chitty chitty bang bang”; the password activated decoding with more than 98% reliability.

- Trial performance varied considerably, and the device still cannot decode spontaneous, free-form thought without substantial errors.

- Ethical and privacy concerns over unprompted thought detection underscore calls for larger trials, improved sensors, stronger consent protocols and regulatory safeguards before clinical use.