Overview

- Stanford researchers demonstrated proof‑of‑concept decoding of silent speech from motor‑cortex activity in four volunteers with severe paralysis, publishing the results in Cell.

- Signals for inner and attempted speech overlapped, and a previously trained attempted‑speech decoder was unintentionally activated when participants only imagined sentences.

- In cued tests, accuracy reached as high as 86% on a 50‑word vocabulary and about 74% when the vocabulary was expanded to a 125,000‑word lexicon.

- Unstructured inner speech proved difficult to decode: results were barely above chance on a sequence‑memory task, and outputs for open‑ended prompts were unusable.

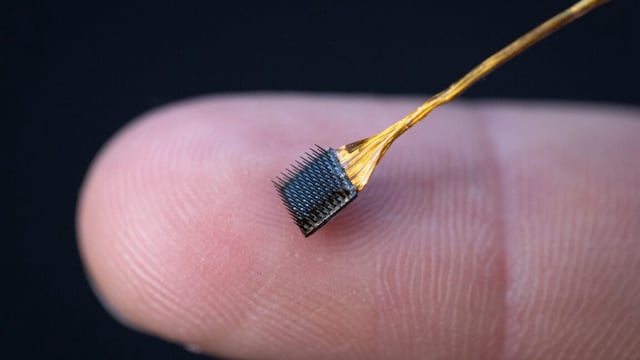

- The team introduced two safeguards: training decoders to ignore labeled inner speech, and an activation phrase imagined by the user, which was detected about 98% of the time. The researchers also noted the need for larger trials and improved hardware.