Overview

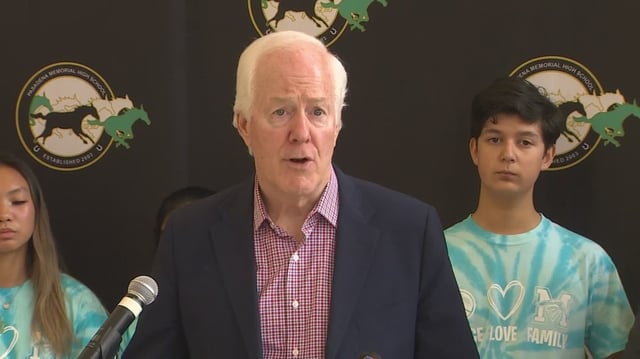

- The PROACTIV AI Data Act was introduced July 22 by Sens. John Cornyn (R-TX) and Andy Kim (D-NJ) to encourage developers to screen AI training datasets for known child sexual abuse material (CSAM).

- The bill directs the National Institute of Standards and Technology to establish voluntary best practices for identifying and removing illicit images from AI training data.

- It offers limited liability protection for companies that comply in good faith and tasks the National Science Foundation with funding research into automated CSAM detection methods.

- Lawmakers cited two data points: a Stanford study that found more than 3,000 suspected CSAM entries in the LAION-5B dataset, and National Center for Missing and Exploited Children (NCMEC) figures showing nearly 500,000 reports of AI-generated CSAM in the first half of 2025.

- PROACTIV joins a broader bipartisan push on Capitol Hill, building on President Trump’s May signing of the Take It Down Act and aligning with revived support for the EARN IT and DEEPFAKES Accountability Acts.