Overview

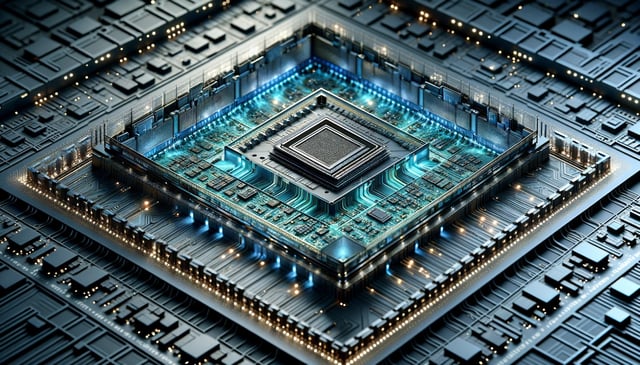

- Samsung announces the development of a 36GB HBM3e memory stack, the highest capacity in the industry, designed to meet the growing demands of AI applications.

- The new memory uses a 12-Hi (12-layer) stack configuration and offers bandwidth of up to 1.28TB/s, which benefits AI training and inference services.

- Samsung's advanced thermal compression non-conductive film (TC NCF) technology enables the industry's smallest gap between stacked memory dies, improving vertical density and reducing die warping.

- Micron also enters the HBM3e market with its 24GB 8-Hi memory, set to power NVIDIA's H200 AI GPUs, highlighting the competitive landscape in high-bandwidth memory.

- Samsung's and Micron's advancements in HBM3e memory are expected to drive significant improvements in AI performance and to reduce total cost of ownership for datacenters.
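
The capacity and bandwidth figures quoted above can be sanity-checked with a little arithmetic. The sketch below is illustrative only: it assumes a 1024-bit interface per stack (the usual width for HBM-family memory, not stated in this article) and decimal units (1TB/s = 1000GB/s). The function names are hypothetical helpers, not part of any vendor tool.

```python
# Assumption: each HBM stack exposes a 1024-bit data interface.
# This width is not given in the article; it is the conventional HBM figure.
INTERFACE_WIDTH_BITS = 1024


def per_die_capacity_gb(stack_capacity_gb: float, layers: int) -> float:
    """Capacity contributed by each DRAM die in the stack, in GB."""
    return stack_capacity_gb / layers


def per_pin_rate_gbps(stack_bandwidth_gb_per_s: float,
                      width_bits: int = INTERFACE_WIDTH_BITS) -> float:
    """Per-pin data rate (Gbit/s) implied by a stack bandwidth in GB/s."""
    return stack_bandwidth_gb_per_s * 8 / width_bits


# Figures taken from the bullets above.
samsung_die_gb = per_die_capacity_gb(36, 12)   # 36GB across 12 layers
micron_die_gb = per_die_capacity_gb(24, 8)     # 24GB across 8 layers
samsung_pin_gbps = per_pin_rate_gbps(1280)     # 1.28TB/s = 1280GB/s

print(f"Samsung 12-Hi: {samsung_die_gb} GB per die")
print(f"Micron 8-Hi:   {micron_die_gb} GB per die")
print(f"Implied per-pin rate at 1.28TB/s: {samsung_pin_gbps} Gbit/s")
```

Under these assumptions, both stacks work out to the same per-die capacity, so the difference in total capacity comes from the number of layers rather than from denser dies.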