Overview

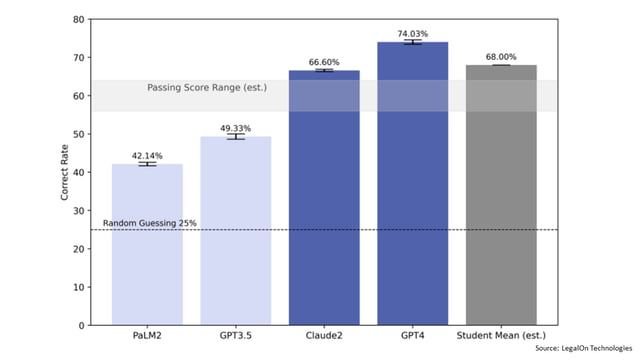

- OpenAI's GPT-4 chatbot scored higher than most people who took a legal ethics exam required for prospective lawyers in nearly every state, according to a new study by LegalOn Technologies.

- The AI software answered 74% of the questions correctly on a simulated Multistate Professional Responsibility Exam (MPRE), beating the estimated 68% average for human test takers nationwide.

- GPT-4 performed particularly well on questions about conflicts of interest and client relationships, but its performance varied by subject area, and it scored lower on topics such as the safekeeping of funds.

- Nearly every state, Wisconsin being the exception, requires law students to pass the 60-question, multiple-choice MPRE before they are granted a license to practice.

- The study suggests that it may one day be possible to develop AI that helps lawyers with ethical compliance and operates, where relevant, in line with their professional responsibilities.