Overview

- OpenAI reports it has shut down more than 40 networks abusing its services since 2024. It says it monitors chats with automated systems and human reviewers to flag misuse.

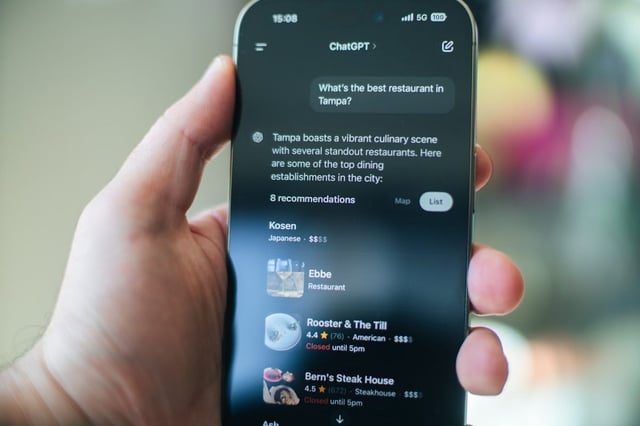

- Threat actors increasingly split tasks across tools, using ChatGPT for reconnaissance and planning while turning to other models for execution and automation.

- The company finds little evidence that its models enable novel attack methods. Instead, AI mostly speeds up existing work such as phishing, malware development, scams, and influence-operation content.

- Accounts linked to Chinese government entities sought help drafting large-scale social media surveillance proposals, including an outline for a Uyghur-related warning model.

- Cybercriminals are scaling their operations and trying to evade detection. Examples range from scam centers in Cambodia, Myanmar, and Nigeria to requests to strip telltale signs of AI-generated text, while tools such as SpamGPT and MatrixPDF are surfacing.