Overview

- OpenAI published the weights of gpt-oss-120b (117 billion parameters) and gpt-oss-20b (21 billion parameters) on platforms such as Hugging Face under an Apache 2.0 license, which permits free commercial use, modification and redistribution.

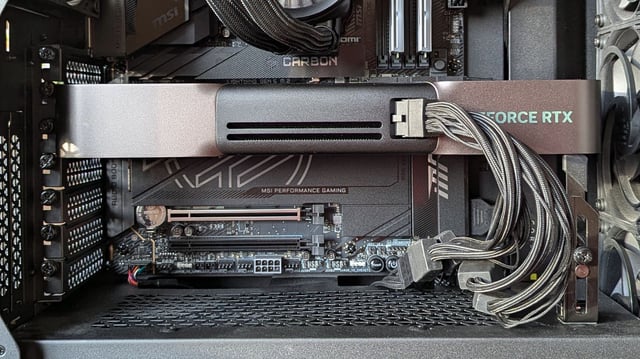

- gpt-oss-120b can run on a single 80 GB Nvidia GPU, while gpt-oss-20b needs only about 16 GB of memory, which makes it deployable on consumer laptops.

- In benchmark tests, gpt-oss-120b performs roughly on par with OpenAI’s o4-mini, and both models outscore DeepSeek’s R1 on Codeforces and on academic benchmarks.

- OpenAI’s safety testing, which included adversarial fine-tuning, third-party reviews and filtering of high-risk data, found no evidence that the open models exceed its internal danger thresholds for cyber or biological misuse.

- The release responds to intensifying competition from Chinese AI labs and to U.S. policy calls for open-weight AI, and it marks a strategic pivot away from OpenAI’s closed-source, API-based business model.
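The hardware figures above can be sanity-checked with a back-of-envelope estimate of weight storage. This is a rough sketch under stated assumptions, not OpenAI's published sizing. It assumes every parameter is stored in an MXFP4-style format at about 4.25 bits (4-bit values plus one shared 8-bit scale per 32-value block) and compares that with 16-bit storage. It also ignores activations, the KV cache, runtime overhead, and any layers kept at higher precision. The helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes).

    Assumption: counts weights only; activations, KV cache and framework
    overhead are ignored.
    """
    return num_params * bits_per_param / 8 / 1e9


# Parameter counts as stated in the overview.
MODELS = {"gpt-oss-120b": 117e9, "gpt-oss-20b": 21e9}

# Assumed ~4.25 bits/param: 4-bit values + an 8-bit scale shared by 32 values.
MXFP4_BITS = 4 + 8 / 32

for name, params in MODELS.items():
    bf16 = weight_memory_gb(params, 16)
    mxfp4 = weight_memory_gb(params, MXFP4_BITS)
    print(f"{name}: ~{bf16:.0f} GB at 16-bit, ~{mxfp4:.1f} GB at ~{MXFP4_BITS} bits/param")
```

Under these assumptions, the 120b weights come to roughly 62 GB, under an 80 GB GPU's capacity, and the 20b weights to roughly 11 GB, under 16 GB. At 16-bit precision, neither model would fit in those budgets, which suggests why low-bit weight storage matters for the single-GPU and laptop claims.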