Overview

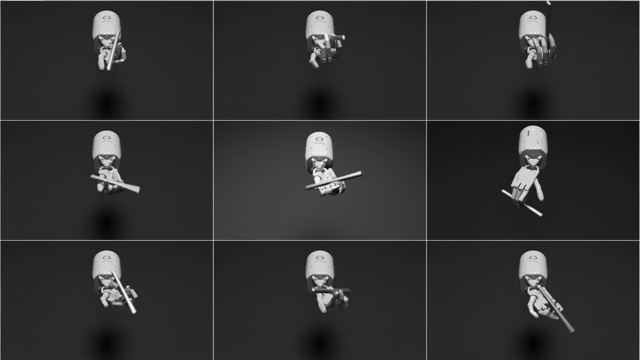

- NVIDIA's Eureka AI agent, powered by OpenAI's GPT-4, autonomously writes the reward code used in reinforcement learning, enabling robots to learn complex tasks such as twirling pens, tossing balls, and opening drawers.

- Eureka's self-written reward programs outperformed human-written ones on 80% of the tasks tested, improving performance in robotic simulations by over 50%.

- Eureka not only designs reward programs but also evaluates its own training results, then uses that feedback to refine the rewards in later iterations.

- Eureka's approach of combining generative AI with reinforcement learning is a key advance in the field and a major step toward solving the trial-and-error problem of reward design in reinforcement learning.

- To encourage further exploration and application, NVIDIA has released the Eureka library of AI algorithms, so researchers can experiment with them using NVIDIA Isaac Gym, the company's physics simulation reference application.
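The design-evaluate-refine cycle summarized above can be pictured as a simple search over candidate reward functions. The sketch below is a toy illustration under loudly stated assumptions, not NVIDIA's code or API: the GPT-4 call is replaced by a hypothetical `propose_rewards` stub that randomly mutates the best reward so far, RL training is replaced by a greedy one-step `rollout` on a 1-D "reach position 5" task, and every name here (`rollout`, `task_fitness`, `reward_search`, `TARGET`) is invented for illustration.

```python
import random
from typing import Callable, List, Tuple

RewardFn = Callable[[int], float]

# Hypothetical toy task: move a point on a line from START to TARGET.
TARGET = 5
START = 0
STEPS = 10
ACTIONS = (-1, 0, 1)


def rollout(reward_fn: RewardFn) -> int:
    """Stand-in for RL training: at each step, greedily take the action
    whose next state the candidate reward scores highest."""
    state = START
    for _ in range(STEPS):
        state = max((state + a for a in ACTIONS), key=reward_fn)
    return state


def task_fitness(final_state: int) -> float:
    """Ground-truth task metric used to judge each candidate reward."""
    return -abs(final_state - TARGET)


def propose_rewards(best_goal: float, n: int,
                    rng: random.Random) -> List[Tuple[float, RewardFn]]:
    """Hypothetical stand-in for the LLM: propose n reward functions by
    perturbing the goal parameter of the best reward found so far."""
    candidates = []
    for _ in range(n):
        goal = best_goal + rng.uniform(-3, 3)
        # Bind goal as a default argument so each lambda keeps its own value.
        candidates.append((goal, lambda s, g=goal: -abs(s - g)))
    return candidates


def reward_search(iterations: int = 5, samples: int = 8,
                  seed: int = 0) -> Tuple[float, float]:
    """Generate candidates, evaluate each by its task fitness, keep the
    best, and feed it back into the next round of proposals."""
    rng = random.Random(seed)
    best_goal, best_fit = 0.0, float("-inf")
    for _ in range(iterations):
        for goal, fn in propose_rewards(best_goal, samples, rng):
            fit = task_fitness(rollout(fn))
            if fit > best_fit:  # keep only strict improvements
                best_goal, best_fit = goal, fit
    return best_goal, best_fit
```

Calling `reward_search()` returns the best reward parameter found and its task fitness (0 means the point ended exactly on the target). The key idea the sketch tries to capture is that the reward is not the task metric itself: each candidate reward is judged by how well the behavior it produces scores on the real task, and that result steers the next round of proposals.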