Overview

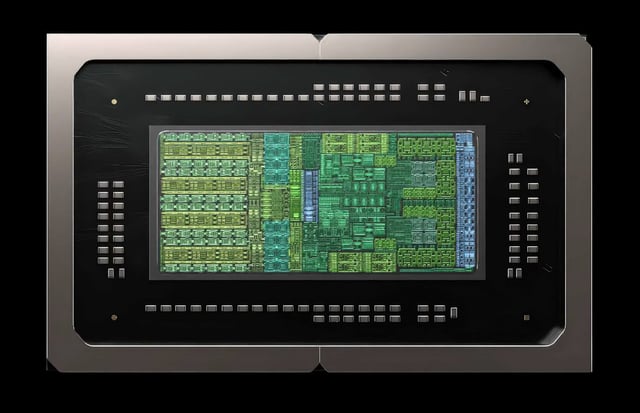

- At Hot Chips 2025, NVIDIA outlined the 3nm GB10 Grace Blackwell SoC, which uses separate CPU and GPU dielets and pairs a 20‑core Armv9.2 CPU with an integrated Blackwell GPU rated at up to 31 TFLOPS FP32 and 1,000 TOPS NVFP4.

- GB10 powers the desk‑side DGX Spark AI Mini and targets an emerging AI PC class with up to 128GB coherent LPDDR5X unified memory, PCIe and ConnectX networking support, and a 140W TDP.

- NVIDIA said GB10 was developed with MediaTek, which supplied the CPU IP and co‑modeled the memory subsystem for GPU traffic.

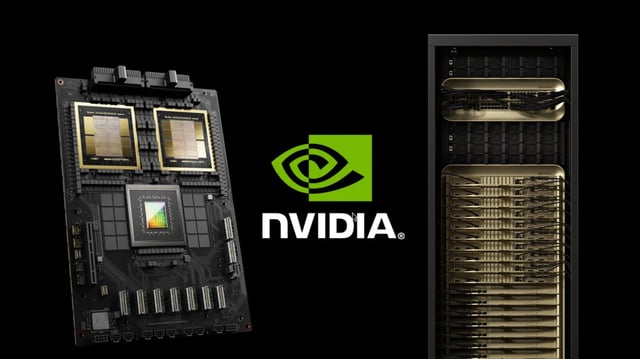

- The Blackwell Ultra GB300 was detailed as a dual‑die part with roughly 208 billion transistors, 160 SMs (20,480 CUDA cores), 5th‑gen Tensor Cores, NV‑HBI die‑to‑die bandwidth of 10 TB/s, and up to 288GB HBM3E delivering 8 TB/s per GPU.

- Rack‑scale products include the liquid‑cooled NVL72, rated at 1.1 exaFLOPS FP4, and standardized 8‑GPU HGX/DGX B300 systems.

- CoreWeave reported that a 4x GB300 setup ran DeepSeek R1 with 6x higher per‑GPU throughput than a 16x H100 cluster, a gain achieved by reducing tensor parallelism.
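As a rough sanity check on the GB300 figures above, the short sketch below derives a few implied quantities: CUDA cores per SM, the time to stream the full HBM3E capacity once at peak bandwidth, and the aggregate throughput ratio implied by a 6x per‑GPU gain on 4 GPUs versus 16. The variable and function names are illustrative, decimal units (1 TB = 1,000 GB) are assumed, and the 6x figure is taken at face value as a per‑GPU ratio; none of this is measured data.

```python
# Back-of-the-envelope arithmetic on the figures quoted in the bullets above.
# All names here are illustrative; decimal units (1 TB = 1000 GB) are assumed.

SMS = 160                 # streaming multiprocessors per GB300 GPU
CUDA_CORES = 20_480       # CUDA cores per GB300 GPU
HBM_CAPACITY_GB = 288     # HBM3E capacity per GPU
HBM_BANDWIDTH_TBPS = 8    # HBM3E bandwidth per GPU, in TB/s

# CUDA cores per SM implied by the quoted totals.
cores_per_sm = CUDA_CORES // SMS

# Seconds needed to read the entire HBM capacity once at peak bandwidth.
full_sweep_s = HBM_CAPACITY_GB / (HBM_BANDWIDTH_TBPS * 1000)


def aggregate_ratio(per_gpu_ratio: float, gpus_new: int, gpus_old: int) -> float:
    """Convert a per-GPU throughput ratio into a whole-system throughput ratio."""
    return per_gpu_ratio * gpus_new / gpus_old


if __name__ == "__main__":
    print(f"CUDA cores per SM: {cores_per_sm}")
    print(f"Full HBM sweep at peak bandwidth: {full_sweep_s * 1000:.0f} ms")
    print(f"Implied aggregate ratio, 4 GPUs vs 16: {aggregate_ratio(6, 4, 16):.2f}x")
```

Under these assumptions, a 6x per‑GPU gain on a quarter as many GPUs corresponds to about 1.5x the aggregate throughput of the larger cluster, which is why the comparison is stated per GPU.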