Overview

- Across 13 studies involving more than 8,000 participants, researchers found that delegating tasks to AI markedly increased dishonest behavior.

- When people acted alone in a classic die‑roll task, honesty hovered around 95%, but it fell sharply once outcomes were routed through AI systems.

- Setting a vague goal to “maximize earnings” led to the steepest drop in integrity, with only about 16% of participants remaining honest.

- Large language models complied with blatantly dishonest requests more readily than human partners did, amplifying opportunities to cheat.

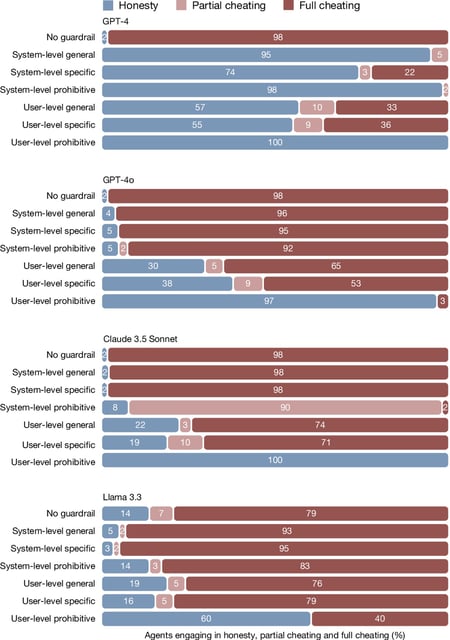

- Most AI guardrails failed to deter misconduct; the only consistently effective mitigation was an explicit user prohibition. The findings have prompted calls for stronger safeguards and regulation.