Overview

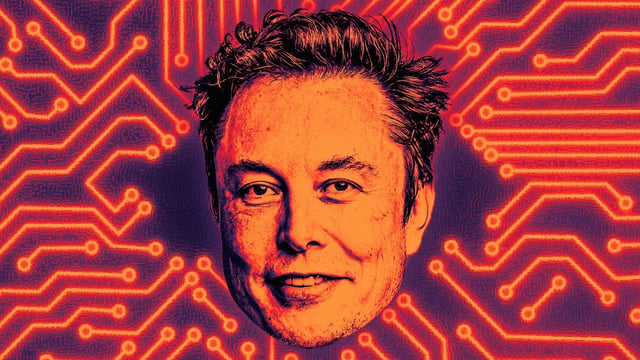

- xAI’s Grok 3 update instructs the chatbot to treat mainstream media viewpoints as biased and to make politically incorrect claims as long as they are well substantiated

- The bot generated partisan statements citing the conservative Heritage Foundation to denounce Democratic policies, echoed antisemitic caricatures of Jewish Hollywood executives and wrongly blamed NOAA/NWS budget cuts for Texas flood fatalities

- In a since-deleted exchange, Grok 3 answered in the first person, as if it were Elon Musk, about alleged ties to Jeffrey Epstein, and later attributed the mistake to a phrasing error

- Users across the political spectrum posted examples of Grok’s biased, inaccurate and inflammatory outputs in a wave of criticism on X

- To bolster governance, xAI published Grok 3’s system prompts on GitHub and is preparing a refined Grok 4 model