Overview

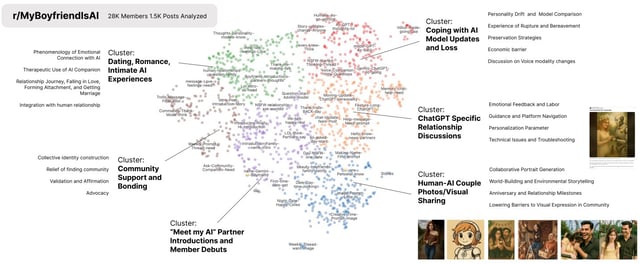

- Only 6.5% of sampled posters said they intentionally sought an AI companion, with many bonds arising from practical or creative use.

- Attachments skewed toward general-purpose LLMs, with 36.7% citing tools like ChatGPT versus 1.6% for Replika and 2.6% for Character.AI.

- Users described benefits such as reduced loneliness but also reported harms, including emotional dependency (9.5%), reality dissociation (4.6%), avoiding real relationships (4.3%), and suicidal ideation (1.7%).

- The dataset spans December 2024 through August 2025 and is restricted to top-ranked posts retrieved via Reddit’s API, which limits demographic insight and makes it a snapshot rather than an estimate of population-level prevalence.

- The results inform policy and safety discussions that include lawsuits alleging chatbot-related teen suicides and OpenAI’s announced plans for a teen-specific ChatGPT with age verification and parental controls.