Overview

- Meta has started small-scale testing of its first in-house AI training chip, designed to handle AI-specific tasks more efficiently than Nvidia GPUs.

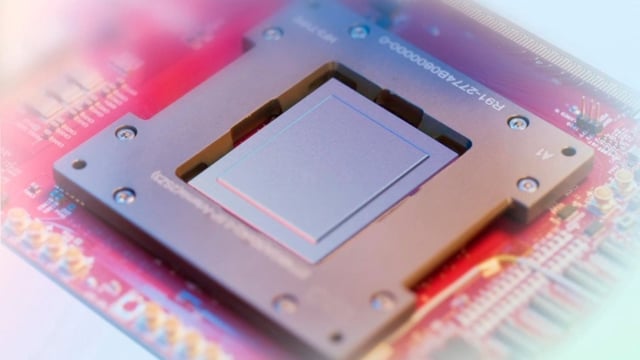

- The chip is part of the company's Meta Training and Inference Accelerator (MTIA) program and was developed in collaboration with Taiwan Semiconductor Manufacturing Company (TSMC).

- Meta aims to reduce its reliance on Nvidia and lower infrastructure costs, with plans to scale up production and use the chip for AI training by 2026 if tests are successful.

- The chip will initially support recommendation systems on Meta platforms such as Facebook and Instagram, and will later be used for generative AI applications such as chatbots.

- Meta's AI infrastructure spending is expected to reach up to $65 billion in 2025, a large share of its total projected expenses of $114–$119 billion for the year.