Overview

- Meta has begun a limited deployment of its first in-house AI training chip, part of its Meta Training and Inference Accelerator (MTIA) program.

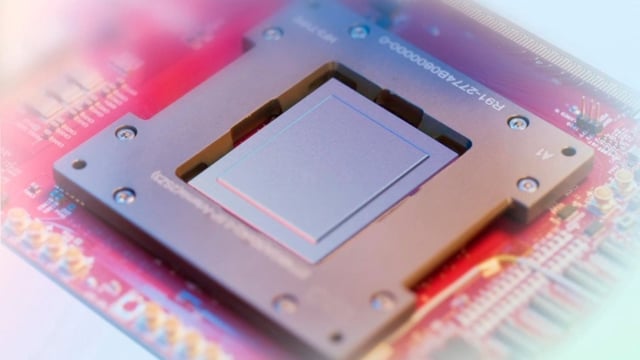

- The chip, developed in collaboration with Taiwan Semiconductor Manufacturing Co. (TSMC), is tailored for AI-specific tasks to improve power efficiency and reduce reliance on Nvidia GPUs.

- If testing proves successful, Meta plans to scale up production and integrate the chip into recommendation systems and generative AI products like chatbots by 2026.

- Meta's AI infrastructure spending is projected to reach up to $65 billion in 2025, which is driving broader efforts to lower long-term operating costs.

- The chip's development follows earlier setbacks, including the discontinuation of a previous in-house chip. Meta remains one of Nvidia's largest customers.