Overview

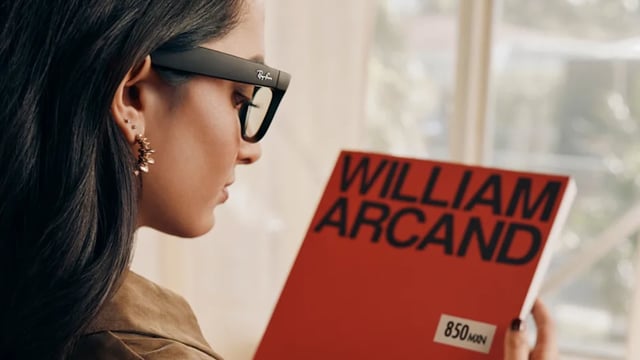

- Meta's customizable 'Detailed Responses' feature for Ray-Ban Meta smart glasses is starting to roll out in the U.S. and Canada, giving blind and low-vision users richer descriptions of their surroundings.

- The 'Call a Volunteer' service, developed with Be My Eyes, will launch in 18 countries this month, connecting users to sighted volunteers for real-time assistance via the glasses' camera.

- Meta is advancing surface electromyography (sEMG) wristband technology to make human-computer interaction accessible to users with physical impairments, with recent research focusing on hand tremors and paralysis.

- Live captions and speech-to-text tools are now available across Meta's extended reality platforms, improving accessibility on Quest headsets and in virtual environments such as Horizon Worlds.

- An American Sign Language (ASL) to English translation chatbot, powered by Meta's Llama AI models, helps Deaf and hearing users communicate on WhatsApp.