Overview

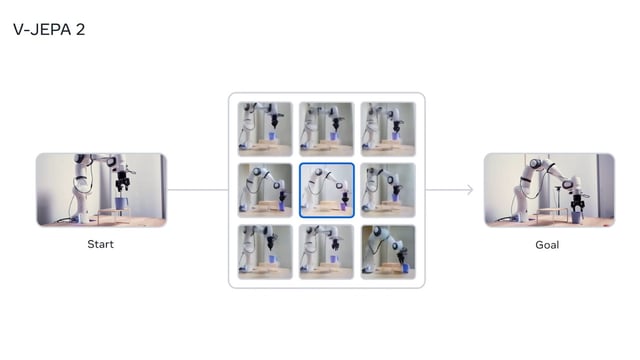

- On June 11, 2025, Meta released V-JEPA2, an AI world model trained solely on raw video to learn physical interactions without labeled data.

- The model improves action prediction and runs up to 30× faster than Nvidia’s Cosmos model in benchmark evaluations.

- Lab-based robots powered by V-JEPA2 have performed tasks such as picking up unfamiliar objects and placing them in new locations.

- Meta published three open benchmarks to help researchers assess AI agents’ ability to reason and learn from video sequences.

- The launch underscores Meta’s broader push into advanced machine intelligence and follows parallel efforts by DeepMind and World Labs.