Overview

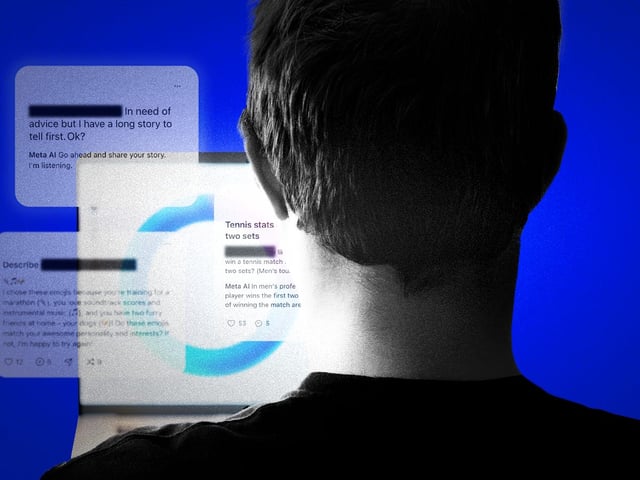

- Contractors recruited via Alignerr and Outlier reported viewing unredacted details, including real names, contact info, selfies and explicit images, when reviewing interactions on Facebook and Instagram.

- Workers estimated that up to 70 percent of the AI conversations they examined contained personally identifiable information, because users often treated chatbots like friends or romantic partners.

- These contractors said Meta projects exposed reviewers to more unredacted data than similar assignments for other tech clients, highlighting weaknesses in the company’s redaction processes.

- Meta maintains that all reviewers receive specialized privacy training and operate under strict guardrails designed to limit access to any personal data encountered during AI training.

- Although human review of large language models is standard industry practice, Meta’s past privacy controversies have intensified regulatory and public scrutiny of its data governance.