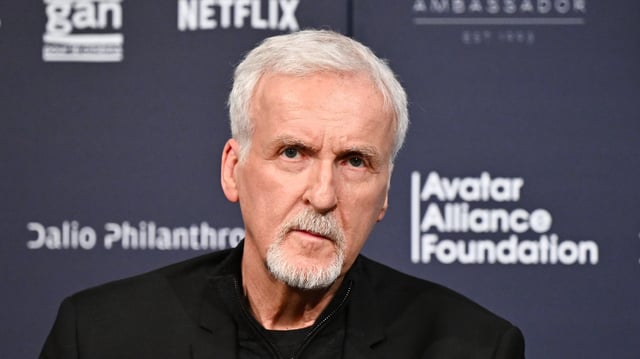

Overview

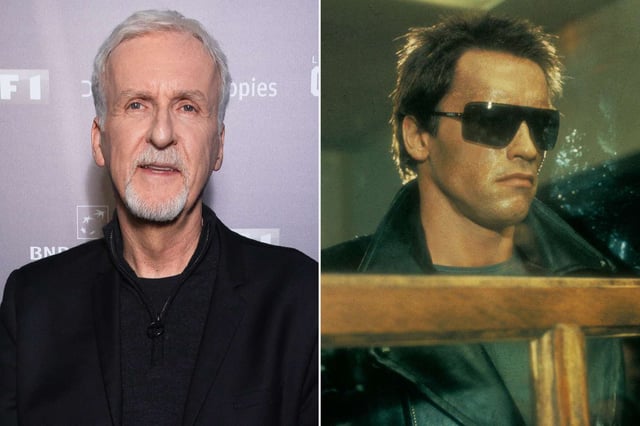

- James Cameron cautioned that AI-controlled weapons systems risk triggering a Terminator-style apocalypse and unintended nuclear exchanges.

- He warned that the pace of modern military decision-making may outstrip human judgment, potentially requiring a superintelligence to keep up despite the danger of critical errors.

- The filmmaker identified climate change, nuclear arsenals, and super-intelligent AI as three existential threats peaking simultaneously.

- As a Stability AI board member, Cameron advocates using generative tools to cut blockbuster VFX budgets in half without laying off artists.

- He maintains that AI cannot replace human screenwriters, arguing it rehashes existing ideas without delivering true emotional depth.