Overview

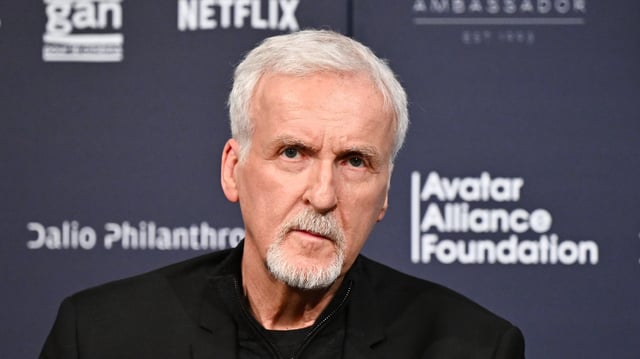

- In a Rolling Stone interview, James Cameron said that pairing AI with weapons systems, including nuclear defense networks, risks creating scenarios reminiscent of his Terminator films.

- He warned that the rapid decision loops of AI-driven battlefields exceed human reaction times, and that human error could trigger accidental nuclear incidents.

- Cameron identified climate change, nuclear arsenals and super-intelligent AI as three existential threats converging at a critical juncture in human development.

- Despite his concerns, he joined Stability AI’s board in September 2024 to champion generative tools aimed at halving blockbuster visual effects costs without cutting jobs.

- He remains skeptical that AI can replicate human lived experience in storytelling, arguing that genuine emotional nuance requires human screenwriters.