Overview

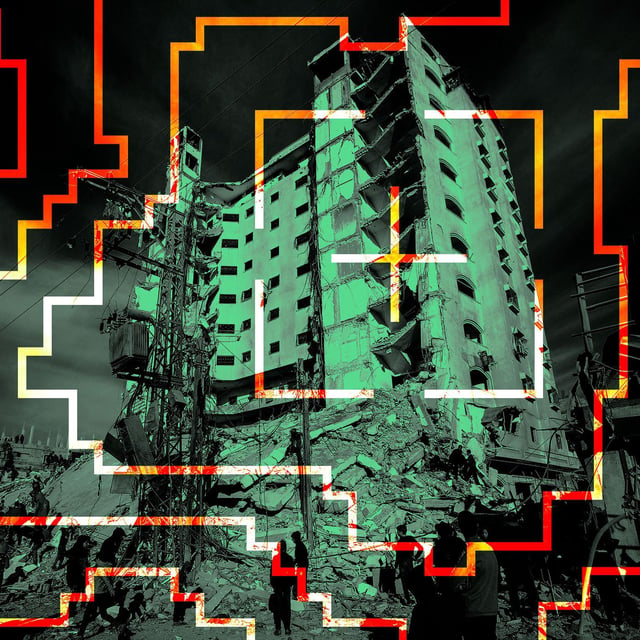

- Israel's military reportedly employs an AI system named Lavender to identify targets in Gaza, sparking significant ethical and legal concerns.

- Lavender, developed after Hamas's October 7 attacks, has allegedly marked 37,000 Palestinians as potential assassination targets, with a reported 10% error rate.

- The system's use has led to thousands of civilian casualties, raising questions about the reliance on AI for life-and-death decisions with minimal human oversight.

- Israeli intelligence sources claim that human analysts serve merely as a 'rubber stamp' for Lavender's decisions, often spending only seconds verifying each target.

- International scrutiny of Israel's military tactics is intensifying, including a call from President Biden for Israel to better protect civilian life and allow aid to flow.