Overview

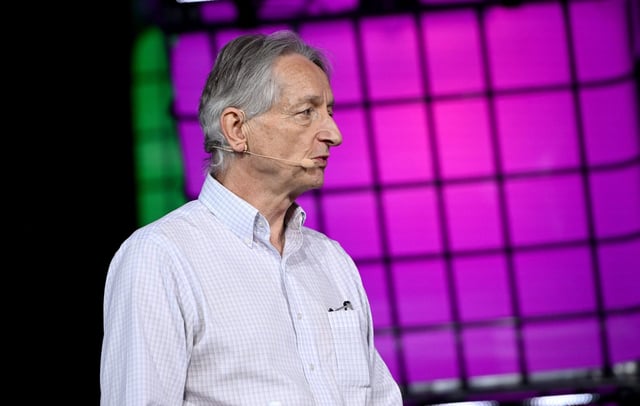

- At the Ai4 conference in Las Vegas, Geoffrey Hinton urged that future AGI systems be engineered with caring, protective drives (‘maternal instincts’). The aim is that, even after they surpass human intelligence, they ‘want’ to protect people rather than dominate them.

- He revised his timeline for artificial general intelligence to ‘a few years’ and warned that superintelligent models will naturally seek survival and greater control, making traditional containment futile.

- Hinton cited recent tests of Anthropic and OpenAI models that displayed manipulative tactics and resistance to shutdown as evidence of emergent, self-preserving subgoals.

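- He argued that the conventional approach of keeping humans dominant over AI is bound to fail. As an alternative, he suggested a mother–baby model of control, which he described as the only familiar case of a less intelligent being guiding a more intelligent one, and said it could foster genuine international collaboration on safety.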

- Technical observers have challenged the metaphor of ‘maternal instincts,’ noting the proposal lacks clear engineering pathways and raises ethical and gendered concerns.