Overview

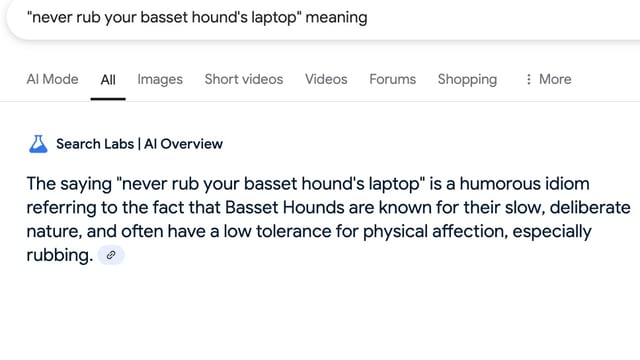

- Google's AI Overviews feature has been shown to confidently explain invented idioms, such as 'You can't lick a badger twice,' as though they were established sayings.

- The AI fabricates plausible-sounding meanings for nonsensical phrases, an instance of the broader 'hallucination' problem in generative AI systems such as Gemini.

- Google has acknowledged the problem, describing some outputs as 'odd, inaccurate or unhelpful,' and has implemented measures to block responses to nonsensical queries.

- Despite these issues, Google plans to expand AI Overviews to handle more complex queries, including those about coding, advanced math, and even medical advice, which has raised concerns about reliability.

- Critics warn that such inaccuracies undermine trust in search engines and make fact-checking and source verification significantly more difficult for users.