Overview

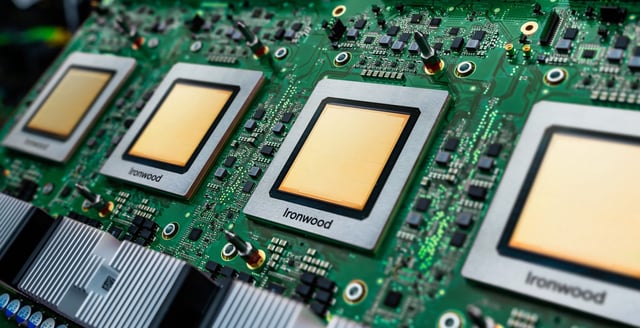

- Ironwood is Google's seventh-generation TPU, purpose-built for inference workloads, offering double the performance per watt compared to its predecessor, Trillium.

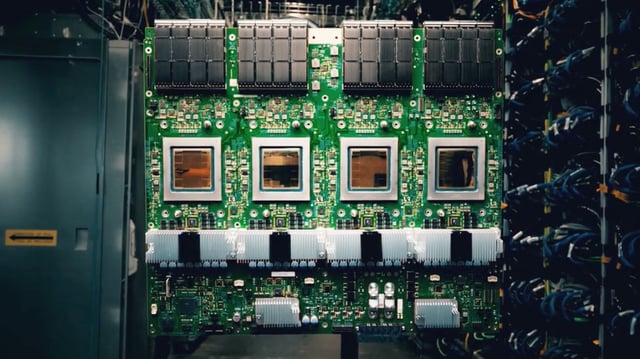

- Ironwood is deployed in two pod sizes: a 256-chip configuration and a 9,216-chip configuration. The larger pod delivers up to 42.5 exaFLOPS of peak compute for demanding AI tasks.

- More memory and faster inter-chip connectivity let Ironwood serve larger AI models with lower latency: each chip carries 192 GB of high-bandwidth memory (HBM), and the inter-chip interconnect provides 1.2 Tbps of bidirectional bandwidth.

- Integrated into Google's AI Hypercomputer architecture, Ironwood is designed to power advanced models like Gemini 2.5, supporting scalable deployment for enterprise AI applications.

- Available exclusively through Google Cloud later in 2025, Ironwood positions Google to challenge Nvidia by addressing the cost and energy demands of AI inference at scale.
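
To put the headline figures in perspective, the short sketch below derives per-chip and per-pod numbers from the values quoted above (42.5 exaFLOPS for the 9,216-chip pod, 192 GB of HBM per chip). The function names are illustrative, and the estimate for the 256-chip pod assumes peak compute scales linearly with chip count, which is an assumption rather than a published figure. Units are decimal (1 exaFLOPS = 1,000 petaFLOPS, 1 TB = 1,000 GB).

```python
# Back-of-the-envelope arithmetic from the figures quoted in this overview.
# Assumptions (not from the source): linear scaling of peak compute with
# chip count, and decimal unit prefixes.

LARGE_POD_CHIPS = 9216
SMALL_POD_CHIPS = 256
LARGE_POD_PEAK_EXAFLOPS = 42.5
HBM_GB_PER_CHIP = 192


def per_chip_petaflops(pod_exaflops: float, chips: int) -> float:
    """Peak compute per chip, in petaFLOPS, implied by a pod-level figure."""
    return pod_exaflops * 1000 / chips


def pod_peak_exaflops(chips: int, chip_petaflops: float) -> float:
    """Pod-level peak in exaFLOPS, assuming linear scaling across chips."""
    return chips * chip_petaflops / 1000


def pod_hbm_terabytes(chips: int, hbm_gb_per_chip: float = HBM_GB_PER_CHIP) -> float:
    """Total HBM capacity of a pod, in terabytes."""
    return chips * hbm_gb_per_chip / 1000


if __name__ == "__main__":
    chip_pf = per_chip_petaflops(LARGE_POD_PEAK_EXAFLOPS, LARGE_POD_CHIPS)
    print(f"Implied peak per chip: {chip_pf:.2f} petaFLOPS")
    print(f"9,216-chip pod HBM:    {pod_hbm_terabytes(LARGE_POD_CHIPS):.1f} TB")
    print(f"256-chip pod HBM:      {pod_hbm_terabytes(SMALL_POD_CHIPS):.1f} TB")
    small_peak = pod_peak_exaflops(SMALL_POD_CHIPS, chip_pf)
    print(f"256-chip pod peak (linear estimate): {small_peak:.2f} exaFLOPS")
```

Under these assumptions, the quoted figures imply roughly 4.6 petaFLOPS of peak compute per chip and about 1.77 PB of HBM across the full 9,216-chip pod.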