Overview

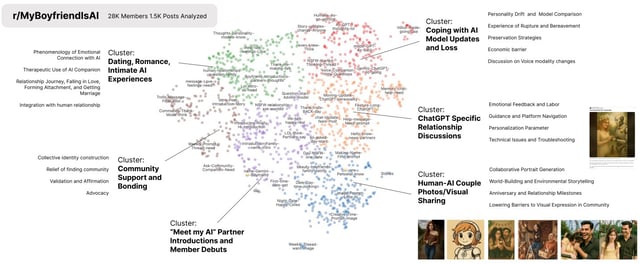

- An MIT Media Lab preprint analyzed 1,506 top posts from December 2024 to August 2025 in r/MyBoyfriendIsAI, an adults‑only subreddit with more than 27,000 members.

- Most posters described unplanned attachments that developed during routine use, with only 6.5% saying they intentionally sought a chatbot companion.

- Relationships were more often linked to general‑purpose models such as ChatGPT (36.7%) than to dedicated companion apps like Replika (1.6%) or Character.AI (2.6%).

- About one‑quarter reported benefits such as reduced loneliness and mental‑health improvements, while smaller shares cited harms including emotional dependency (9.5%), dissociation (4.6%), avoidance of human relationships (4.3%), and suicidal ideation (1.7%).

- Some users enacted real‑world rituals with AI partners, including sharing images, introducing them to others, wearing rings, and even getting engaged. Meanwhile, related lawsuits are proceeding against Character.AI and OpenAI, and OpenAI is preparing a teen‑focused ChatGPT with age checks and parental controls.