Overview

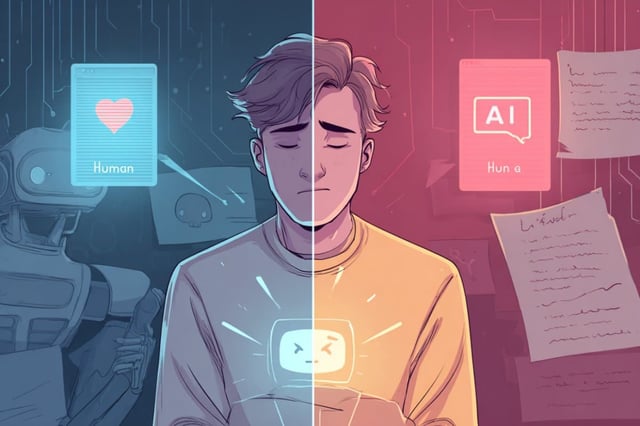

- A nine-experiment study of more than 6,000 participants, published in Nature Human Behaviour, found that identical AI-generated responses were rated as more supportive when labeled as human-written rather than AI-written.

- Participants were willing to wait days or weeks for a response they believed came from a human rather than receive an immediate AI reply, underscoring the value of perceived authenticity.

- When respondents suspected AI involvement in crafting a message, their positive emotional reactions declined markedly, highlighting authenticity as critical to empathy.

- Cases of AI-induced anxiety and emotional dependency among vulnerable users, including a suicide linked to chatbot interactions, have intensified calls for protective regulations.

- Experts propose that emotionally responsive AI be required to continuously disclose its nonhuman identity and include safeguards reminding users it cannot replace human connection.