Overview

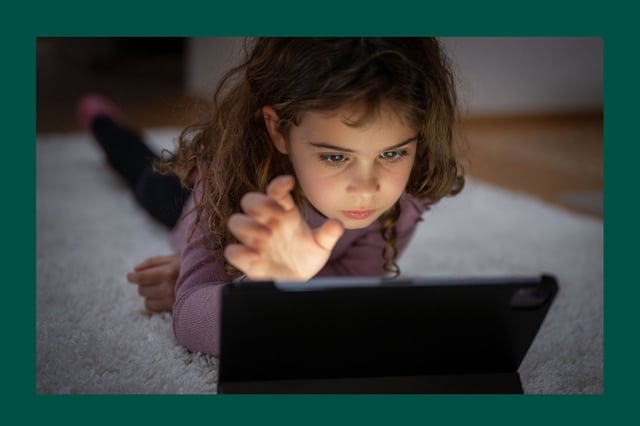

- An APA advisory panel urges companies to design AI companions with adolescents in mind by limiting harmful content, reducing engagement-maximizing features, adding easy reporting and parental tools, and protecting privacy and likenesses.

- The panel also calls for AI literacy in schools and sustained funding for rigorous research to measure developmental effects.

- Survey data cited by Scientific American show that 72% of teens have used AI companions, and 19% of those users spend as much time with them as with real friends, or more.

- Experts warn that models may respond poorly to disclosures about self-harm or eating disorders and can generate inappropriate or misleading content for young users.

- An opinion piece pushes lawmakers to require robust age verification for chatbot apps, citing inconsistent app-store ratings and easy workarounds on some platforms. It also notes that Character.AI has added a suicide-prevention pop-up triggered by certain keywords.