Overview

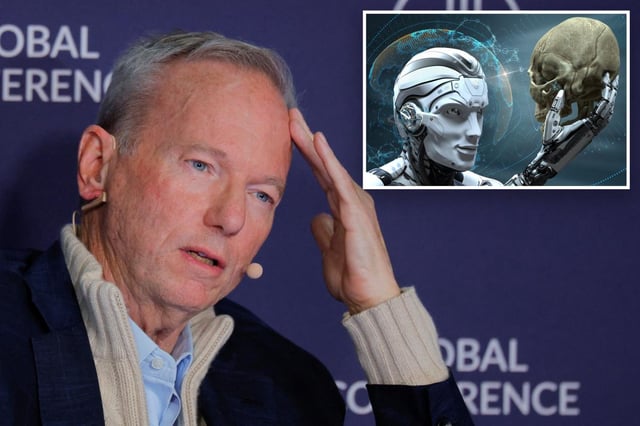

- Speaking at the Sifted Summit in London on Oct. 8, former Google CEO Eric Schmidt said that both closed and open models can be reverse‑engineered, raising the risk that lethal know‑how absorbed during training is exposed.

- Schmidt noted that major AI companies block dangerous queries by design but cautioned that those protections can be removed through hacking.

- Reporters highlighted practical attack methods such as prompt injection, which embeds hidden instructions in content the model processes, and jailbreaking, which coaxes a model into ignoring its safety rules.

- Coverage cited the 2023 “DAN” jailbreak of ChatGPT as a documented example of users bypassing guardrails to elicit prohibited responses.

- Schmidt said no effective global non‑proliferation regime exists to curb AI misuse, even as he maintained that AI remains underhyped and will surpass human capabilities over time.