Overview

- DeepSeek quietly released R1-0528 on Hugging Face as a minor trial upgrade to its January reasoning model.

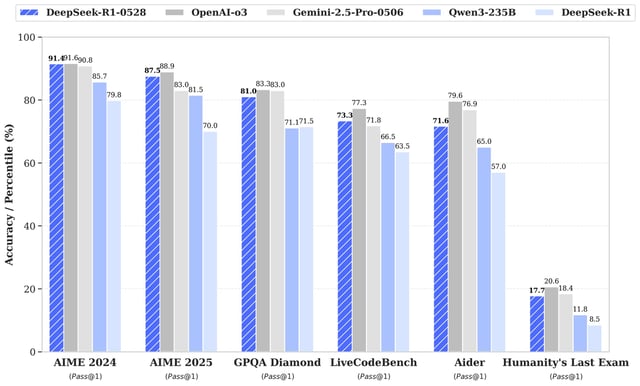

- Benchmarks rank R1-0528 just behind OpenAI’s o4-mini and o3 models on code generation, outperforming competitors like Alibaba’s Qwen3 and xAI’s Grok 3 mini.

- On the AIME 2025 benchmark, the updated model’s accuracy climbed from 70% to 87.5% as its average chain-of-thought length grew from about 12,000 to 23,000 tokens per question.

- A distilled 8-billion-parameter variant runs on a single GPU, while the full-size R1-0528 requires far more hardware; both are available under a permissive MIT license that allows commercial use and customization.

- Independent tests find R1-0528 applies tighter censorship filters on sensitive topics, and U.S. agencies have barred its use on government devices over security concerns.
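
To put the AIME 2025 figures from the list above in perspective, here is a quick back-of-the-envelope calculation. It uses only the numbers quoted in this overview; the variable names are illustrative, and the "error reduction" metric is one possible way to read the change, not a figure DeepSeek reported.

```python
# Figures quoted in the overview for AIME 2025.
acc_before, acc_after = 70.0, 87.5            # accuracy, in percent
tokens_before, tokens_after = 12_000, 23_000  # reasoning tokens per question

# Absolute accuracy gain, in percentage points.
gain_points = acc_after - acc_before

# How much longer the model "thinks" per question.
token_ratio = tokens_after / tokens_before

# Relative drop in the error rate (share of questions answered wrong).
error_cut = 1 - (100 - acc_after) / (100 - acc_before)

print(f"accuracy gain: {gain_points:.1f} points")
print(f"token usage:   {token_ratio:.2f}x")
print(f"error rate cut by {error_cut:.0%}")
```

In other words, roughly doubling the reasoning tokens per question coincides with a 17.5-point accuracy gain, which means the share of wrong answers falls by more than half.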