Overview

- Google DeepMind’s Gemini Deep Think model scored 35 out of 42 points under authentic IMO exam conditions, earning a gold medal as confirmed by IMO president Gregor Dolinar.

- OpenAI’s experimental model reached the gold-medal cutoff with a self-reported 35 points, graded by three former IMO medalists, but the result has not been formally confirmed by competition organizers.

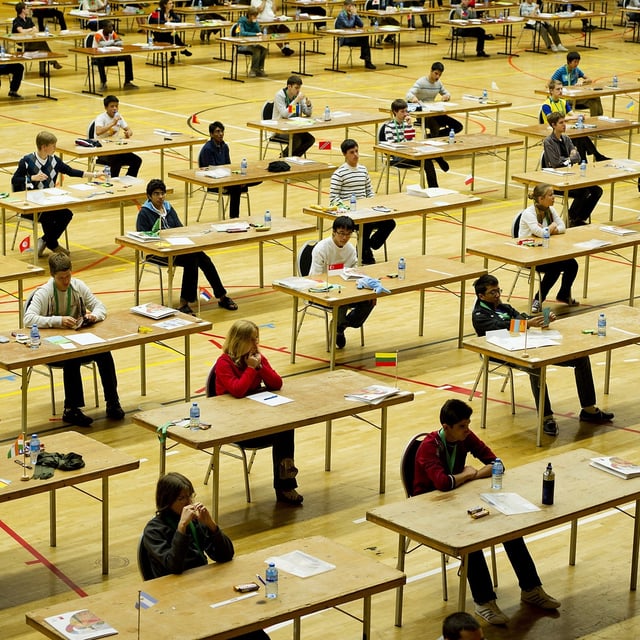

- Both AI systems solved five of the six problems (each worth 7 points, hence 35 of 42) without internet access or external tools, completing two 4.5-hour sessions and writing full natural-language proofs.

- OpenAI’s early announcement of its score drew criticism from DeepMind CEO Demis Hassabis and fueled calls for standardized announcement procedures for AI contest entries.

- Experts including NYU’s Gary Marcus and Fields Medalist Terence Tao have raised concerns about the fairness of lab-based testing and the comparability of AI performance to human contestants under strict exam rules.