Overview

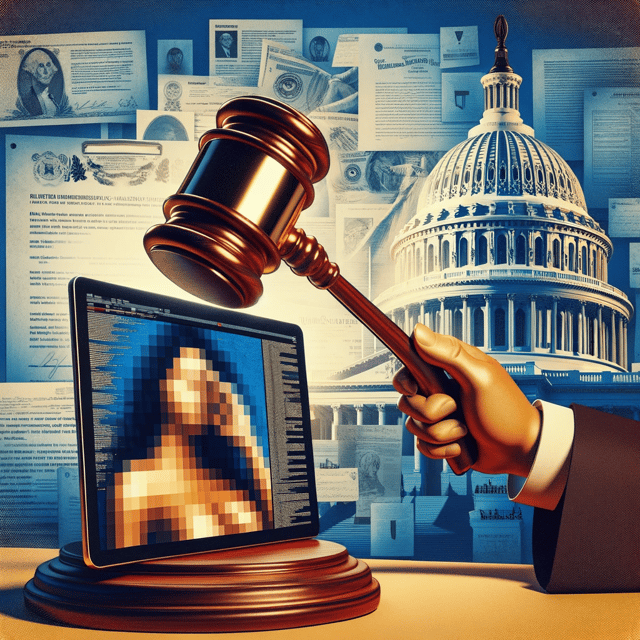

- Sexually explicit deepfake images of Taylor Swift have gone viral, drawing widespread condemnation and highlighting the growing problem of AI-generated non-consensual intimate imagery.

- Adobe is developing Content Credentials, a watermarking tool for AI-generated photos that could help trace the creators of abusive images, although critics argue it is not a comprehensive solution.

- Law enforcement agencies are struggling with a surge of AI-generated child sexual abuse images, which complicate investigations into real crimes against children.

- State lawmakers across the U.S. are seeking ways to combat non-consensual deepfake images: at least 10 states have enacted related laws, and many more are considering measures.

- Experts predict that cases involving AI-generated child sexual abuse material will grow exponentially, raising questions about whether existing federal and state laws are adequate to prosecute these crimes.