Overview

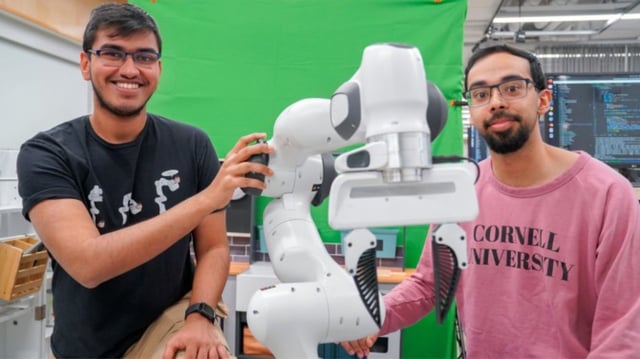

- RHyME, short for Retrieval for Hybrid Imitation under Mismatched Execution, allows robots to learn tasks by watching a single how-to video.

- The framework needs only 30 minutes of robot training data and raises task success rates by more than 50% over earlier methods.

- RHyME uses a memory bank of previously seen videos to adapt to new tasks, bridging differences between human and robot motions.

- Unlike traditional systems, RHyME handles mismatches in human-robot execution, enabling flexible and robust learning.

- The research, supported by Google, OpenAI, and U.S. government agencies, will be presented at the IEEE International Conference on Robotics and Automation (ICRA) in May 2025.
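
The memory-bank idea in the bullets above can be illustrated with a small retrieval sketch. This is not the RHyME implementation: it assumes each stored clip has already been reduced to a fixed-length embedding vector, and it uses cosine similarity as a stand-in for whatever matching the framework actually performs. The clip names, vectors, and the helper `retrieve_nearest` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_nearest(query, memory_bank, k=1):
    """Return the ids of the k stored clips whose embeddings are most similar to the query."""
    scored = [
        (cosine_similarity(query, embedding), clip_id)
        for clip_id, embedding in memory_bank.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [clip_id for _, clip_id in scored[:k]]


# Hypothetical memory bank: clip id -> made-up embedding of a previously seen video.
memory_bank = {
    "pick_up_mug": [0.9, 0.1, 0.0],
    "open_drawer": [0.0, 1.0, 0.2],
    "place_in_sink": [0.1, 0.0, 1.0],
}

# Hypothetical embedding of a segment from a new how-to video.
query = [0.8, 0.2, 0.1]
print(retrieve_nearest(query, memory_bank, k=2))
```

In this toy setup, a segment of a new video is matched to the most similar stored clips, which is one plausible way a system could reuse earlier experience when the human's motion does not map directly onto the robot's.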