Overview

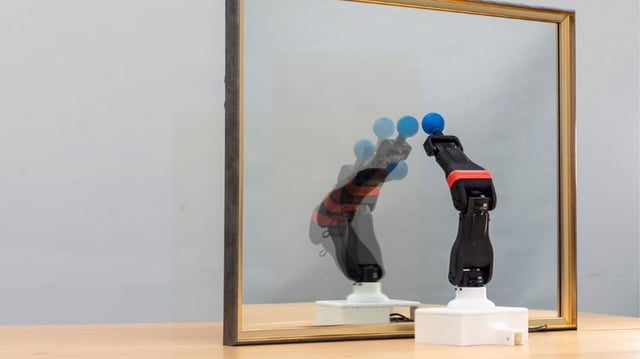

- Researchers at Columbia University have built a robot arm that learns about its own body by watching its movements through a single camera, a capability loosely analogous to human self-awareness.

- The robot uses a self-supervised learning framework with three deep neural networks to build a kinematic model of itself from video data and then plan its actions with that model.

- The method also lets the robot adapt to physical damage: in one demonstration, the robot noticed that a bent limb had changed how it moved, updated its self-model, and compensated accordingly.

- The technology reduces the need for extensive virtual simulations and human programming, enabling robots to operate more autonomously in real-world environments.

- Potential applications include manufacturing, home assistance, and elder care, where robots that adapt to their own damage could reduce downtime and improve reliability.
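The core loop described above (move, watch yourself, fit a self-model, plan with it, and re-fit when predictions stop matching reality) can be illustrated with a deliberately tiny toy. This is **not** the Columbia system, which learns from raw video with three deep neural networks. It is a sketch under simplifying assumptions. The "camera" here directly reports the end-effector position of a hypothetical 2-link planar arm. The self-model is a two-parameter least-squares fit of link lengths instead of a neural network. A bent limb is crudely modeled as a shorter effective second link. All function names, link lengths, and the damage threshold are hypothetical.

```python
import math
import random


def forward(lengths, q):
    """Predicted end-effector (x, y) of a 2-link planar arm with joint angles q."""
    l1, l2 = lengths
    q1, q2 = q
    return (l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
            l1 * math.sin(q1) + l2 * math.sin(q1 + q2))


def observe(true_lengths, q, rng, noise=0.002):
    """Stand-in for the camera: the true position plus small Gaussian noise."""
    x, y = forward(true_lengths, q)
    return (x + rng.gauss(0, noise), y + rng.gauss(0, noise))


def babble(true_lengths, n, rng):
    """Self-supervised data: random joint commands paired with what the camera sees."""
    data = []
    for _ in range(n):
        q = (rng.uniform(-math.pi, math.pi), rng.uniform(-math.pi, math.pi))
        data.append((q, observe(true_lengths, q, rng)))
    return data


def fit_self_model(data):
    """Least-squares link lengths; for known angles the position is linear in (l1, l2)."""
    a11 = a12 = a22 = b1 = b2 = 0.0
    for (q1, q2), (x, y) in data:
        # Each observation contributes an x-row and a y-row to the normal equations.
        rows = ((math.cos(q1), math.cos(q1 + q2), x),
                (math.sin(q1), math.sin(q1 + q2), y))
        for f1, f2, target in rows:
            a11 += f1 * f1
            a12 += f1 * f2
            a22 += f2 * f2
            b1 += f1 * target
            b2 += f2 * target
    det = a11 * a22 - a12 * a12
    # Solve the 2x2 system [[a11, a12], [a12, a22]] @ (l1, l2) = (b1, b2).
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)


def mean_error(model, data):
    """Average distance between the self-model's predictions and the observations."""
    return sum(math.dist(forward(model, q), obs) for q, obs in data) / len(data)


def plan(model, target, steps=180):
    """Grid-search the joint angles whose *predicted* position is closest to target."""
    grid = [-math.pi + 2 * math.pi * i / steps for i in range(steps)]
    return min(((q1, q2) for q1 in grid for q2 in grid),
               key=lambda q: math.dist(forward(model, q), target))


rng = random.Random(0)
true_arm = (1.0, 0.5)      # hypothetical link lengths of the intact arm
healthy_model = fit_self_model(babble(true_arm, 200, rng))

damaged_arm = (1.0, 0.35)  # hypothetical damage: shorter effective second link
recent = babble(damaged_arm, 50, rng)
damage_detected = mean_error(healthy_model, recent) > 0.02  # hypothetical threshold
model = fit_self_model(recent) if damage_detected else healthy_model

target = (1.1, 0.4)
q = plan(model, target)
reach_error = math.dist(forward(damaged_arm, q), target)  # judged on the real (damaged) arm
print(healthy_model, model, damage_detected, round(reach_error, 3))
```

The planner only ever consults the learned model, never the true arm. That is why re-fitting after the prediction error jumps is what restores accurate reaching. A real system would replace the parametric fit with a learned model of the full body shape and the direct position readout with image processing.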