Overview

- OmniHuman, ByteDance's new AI model, can create realistic full-body videos of people talking, singing, and moving using just a single photo and audio input.

- The system outperforms previous methods by generating natural gestures, synchronized speech, and detailed body movements, showcasing its versatility across various scenarios.

- ByteDance trained OmniHuman on over 18,700 hours of video data using a multimodal approach combining text, audio, and body poses to enhance realism and reduce data wastage.

- While the technology demonstrates potential for entertainment, education, and digital avatars, experts warn about risks such as deepfake misuse in misinformation and fraud.

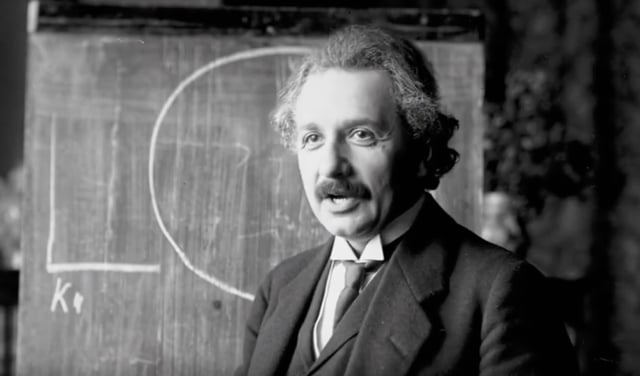

- OmniHuman is currently a research project with no public release date, but its viral demos, including a lifelike recreation of Albert Einstein, highlight its groundbreaking capabilities.