Overview

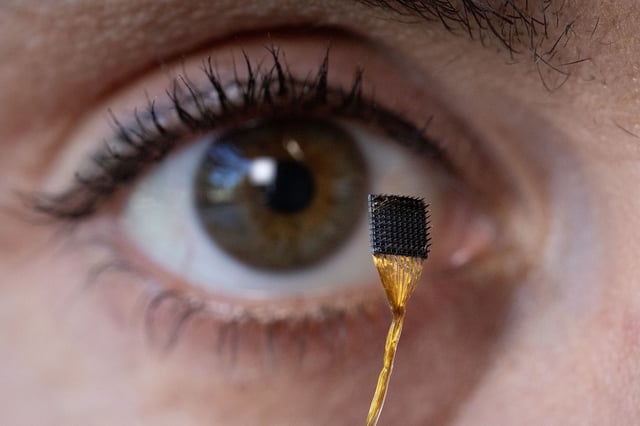

- Four volunteers paralyzed by ALS or brainstem stroke had microelectrode arrays in the motor cortex, and recordings from those arrays allowed prompted inner speech to be decoded with reported accuracy of up to 74 percent.

- The system tracked neural activity tied to phonemes and used AI to assemble words and sentences from vocabularies as large as 125,000 words.

- Inner and attempted speech produced overlapping but separable neural patterns, with inner speech signals generally weaker than those from attempted speech.

- To protect mental privacy, decoding was activated only after the user silently thought a chosen passphrase, such as “chitty chitty bang bang,” which the system detected with more than 98 percent accuracy.

- Performance remained imperfect in early tests: error rates ranged from 14 to 33 percent on a 50-word set, and the system occasionally captured unintended inner speech, such as silently counted numbers. The result is a proof of principle that needs further validation.
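
The passphrase gate described above can be illustrated with a minimal sketch. It assumes the decoder already emits a stream of word tokens, whereas the reported system detects the passphrase from neural activity directly. The class name `GatedDecoder`, its `feed` method, and the helper `run` are hypothetical names chosen for illustration, not part of the study's software.

```python
from typing import List, Optional, Sequence, Tuple

# Example passphrase taken from the text; any phrase could be configured.
PASSPHRASE: Tuple[str, ...] = ("chitty", "chitty", "bang", "bang")


class GatedDecoder:
    """Hypothetical gate: suppress decoded words until the passphrase is seen."""

    def __init__(self, passphrase: Sequence[str] = PASSPHRASE) -> None:
        self.passphrase = tuple(passphrase)
        self.unlocked = False
        self._recent: List[str] = []  # sliding window of the latest tokens

    def feed(self, word: str) -> Optional[str]:
        """Return the word if decoding is unlocked, otherwise None."""
        if self.unlocked:
            return word
        # While locked, only track enough tokens to match the passphrase.
        self._recent.append(word)
        self._recent = self._recent[-len(self.passphrase):]
        if tuple(self._recent) == self.passphrase:
            self.unlocked = True  # the passphrase itself is not emitted
        return None


def run(words: Sequence[str]) -> List[str]:
    """Pass a token stream through a fresh gate and collect the output."""
    decoder = GatedDecoder()
    outputs = (decoder.feed(w) for w in words)
    return [w for w in outputs if w is not None]


if __name__ == "__main__":
    stream = ["one", "two", "chitty", "chitty", "bang", "bang", "hello", "world"]
    print(run(stream))
```

In this sketch, silently counted numbers that arrive before the passphrase are discarded, which mirrors the privacy goal. It does not model detection errors or a way to lock decoding again.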