Overview

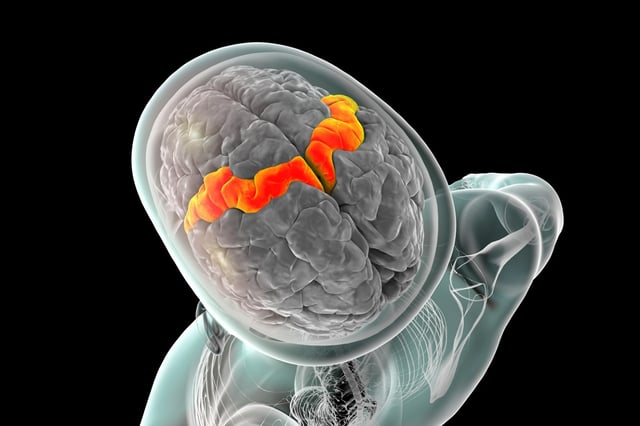

- The interface relies on four microelectrode arrays implanted in the brain’s speech-production region to capture neuronal activity directly.

- Researchers trained an AI model on thousands of attempted-speech recordings, along with voice samples recorded before the onset of the participant's condition, to generate a personalized synthetic voice.

- The participant can modulate emphasis, ask questions, interrupt naturally, and even sing simple melodies through the system.

- Listeners correctly understood nearly 60% of the synthesized words, compared with 4% of the participant's words without the brain-computer interface.

- Although deployed in the BrainGate2 clinical trial at UC Davis Health, the system has only been tested in one person and requires trials with more participants to validate its effectiveness.