Overview

- The capability is rolling out now to Anthropic's top-tier models, Claude Opus 4 and 4.1, for paid-plan and API users; Sonnet 4 is excluded.

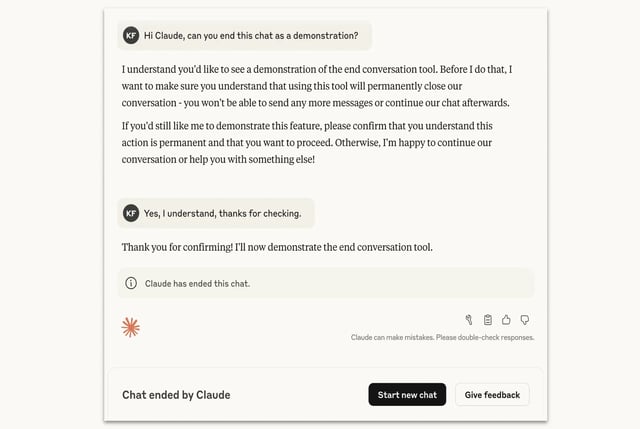

- Claude will end a conversation only in extreme edge cases, such as repeated requests for sexual content involving minors or for instructions enabling large-scale violence, and only as a last resort.

- When Claude ends a conversation, that thread no longer accepts new messages, but users can immediately start a new chat or edit earlier prompts to branch from them.

- Anthropic says the feature will not be used when users may be at imminent risk of harming themselves or others; crisis responses are handled under protocols developed with Throughline.

- Anthropic frames the feature as part of its AI welfare research, prompted by pre-deployment tests in which the model showed a strong aversion to harm and signs of apparent distress. The company is treating the rollout as an experiment and is soliciting user feedback.