Overview

- The conversation-ending feature is now rolling out on Claude Opus 4 and 4.1 but is not being added to the widely used Sonnet 4 model.

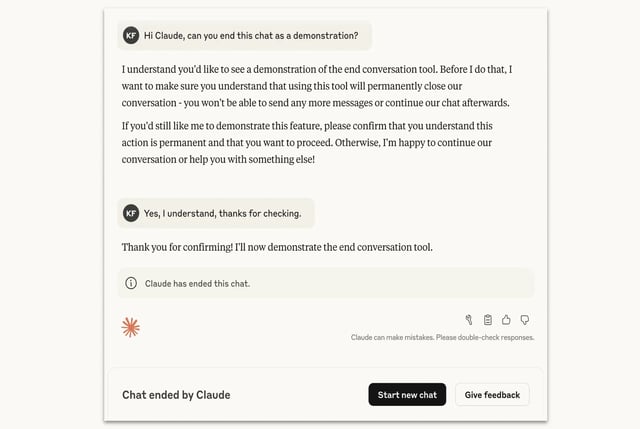

- It activates only as a last resort, after multiple attempts to redirect the user have failed, and only in extreme edge cases such as requests for sexual content involving minors or instructions for mass violence.

- When Claude ends a chat, that specific thread closes to new messages, but users can immediately start a fresh conversation or edit and resubmit previous prompts.

- Anthropic says the vast majority of users will never experience a forced termination and that the AI will not cut off chats where users appear to be at imminent risk of harming themselves or others.

- The company frames the change as part of an experimental “model welfare” program, inviting user feedback and noting uncertainty about any potential moral status of LLMs.