Overview

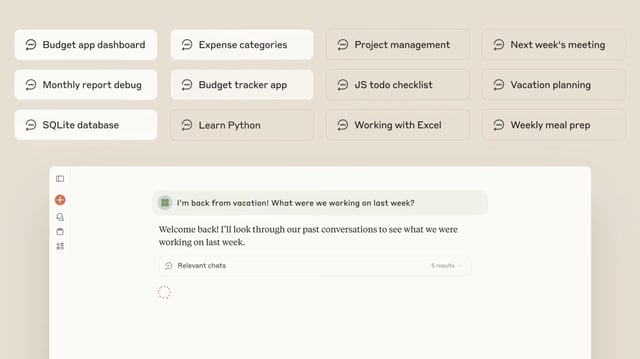

- Anthropic has added a chat-reference setting that lets Max, Team and Enterprise users search and resume past conversations on web, desktop and mobile, without Claude building a persistent user profile.

- Claude Sonnet 4’s context window has been expanded to 1 million tokens in a public beta on the Anthropic API and Amazon Bedrock, with support on Google Cloud’s Vertex AI coming soon.

- API requests exceeding 200,000 tokens now incur higher input and output rates to offset compute costs, while prompt caching and batch processing remain options to reduce latency and expense.

- The expanded context is pitched for large-scale code analysis, extensive document synthesis and context-aware agent workflows that maintain state across hundreds of tool calls.

- Industry observers flag potential platform lock-in from deeper integrations and warn that coherence may suffer and costs may balloon at extreme context sizes.
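
To make the tiered pricing above concrete, here is a minimal Python sketch of how a request's cost might be estimated against the 200,000-token threshold. It assumes the whole request is billed at the higher rates once its input exceeds the threshold, which the summary does not spell out. The `Rates` class, the `estimate_cost` function and the per-million-token figures are all hypothetical placeholders, not Anthropic's published prices.

```python
from dataclasses import dataclass

# Threshold named in the summary: requests above this size pay higher rates.
LONG_CONTEXT_THRESHOLD = 200_000


@dataclass(frozen=True)
class Rates:
    """Price per million tokens (hypothetical placeholder values only)."""
    input_per_mtok: float
    output_per_mtok: float


def estimate_cost(input_tokens: int, output_tokens: int,
                  standard: Rates, long_context: Rates,
                  threshold: int = LONG_CONTEXT_THRESHOLD) -> float:
    """Estimate the cost of one request under a two-tier rate card.

    Assumption: if the input exceeds the threshold, the long-context
    rates apply to every input and output token in the request.
    """
    rates = long_context if input_tokens > threshold else standard
    total = (input_tokens * rates.input_per_mtok
             + output_tokens * rates.output_per_mtok)
    return total / 1_000_000


# Placeholder rate cards for illustration; substitute the real price list.
STANDARD = Rates(input_per_mtok=1.0, output_per_mtok=5.0)
LONG = Rates(input_per_mtok=2.0, output_per_mtok=10.0)

if __name__ == "__main__":
    for tokens in (150_000, 200_000, 600_000):
        cost = estimate_cost(tokens, 2_000, STANDARD, LONG)
        print(f"{tokens:>7} input tokens: {cost:.4f} (placeholder currency units)")
```

Because the jump in rates applies at the threshold, a request just over 200,000 tokens can cost noticeably more than one just under it. That step is where the prompt caching and batch processing mentioned above may be worth weighing.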