Overview

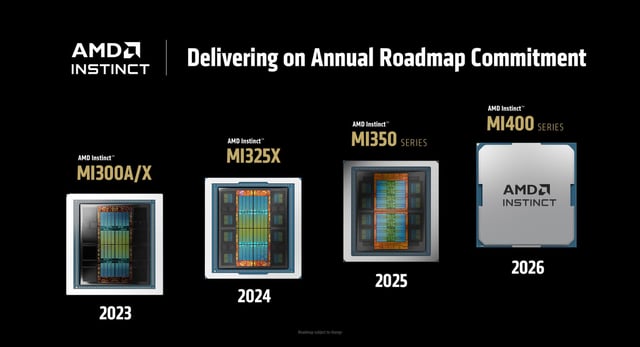

- AMD says the Instinct MI350 Series delivers four times the AI compute of its predecessor and a 35-fold generational gain in inference performance.

- AMD previewed the Instinct MI400 Series, which it says will deliver up to 10× the MI300X’s performance, and plans to integrate it into the Helios rack-scale system in 2026.

- Helios will be built on open standards, including UALink interconnects and OCP-compliant designs, to rival Nvidia’s NVL72 racks, which rely on the proprietary NVLink interconnect.

- The upgraded ROCm 7 software stack offers better compatibility with industry-standard AI frameworks and improved performance for training and inference workloads.

- AMD projects strong double-digit growth in its AI chip business despite export controls and has secured deployments with OpenAI, Meta, Oracle, xAI and Cohere.