Overview

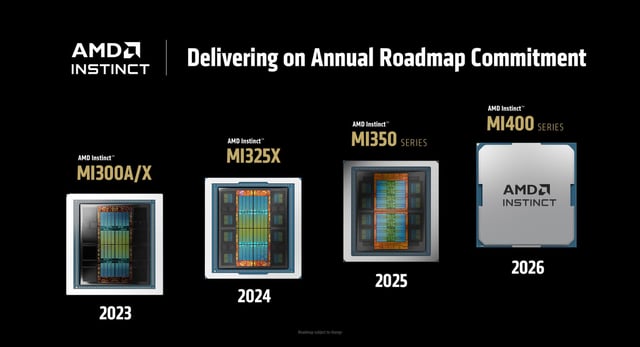

- AMD introduced its Instinct MI350X and MI355X GPUs at its Advancing AI event, citing gains of up to 3× in training performance and up to 35× in inference performance versus the MI300X.

- The liquid-cooled MI355X features 288 GB of HBM3E memory, 8 TB/s of memory bandwidth and a 1.4 kW board power envelope; AMD says it matches or exceeds Nvidia's Blackwell chips on key FP4 and training benchmarks.

- The ROCm 7 software stack now delivers native support for major AI frameworks, expanded hardware compatibility and new development tools to boost performance on AMD accelerators.

- OpenAI, Meta, Oracle and Microsoft have begun deploying MI350-series GPUs in their AI clusters, with OpenAI’s Sam Altman confirming plans to use the chips for ChatGPT workloads.

- AMD plans to launch Helios rack-scale servers in 2026, each integrating 72 MI400-series GPUs and built on the open UALink interconnect standard, to rival Nvidia’s proprietary rack designs.