Overview

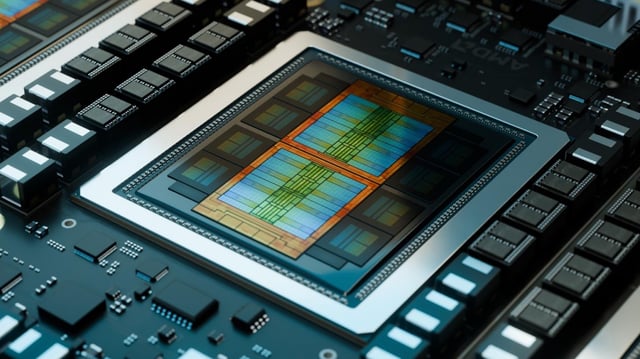

- The MI350X and MI355X build their compute chiplets on TSMC’s N3P process and use a redesigned chiplet package. AMD claims up to 4.2 times faster AI inference than the previous-generation MI300X, along with improved power efficiency.

- The MI355X variant is designed for high-density, direct-liquid-cooled racks. These racks hold up to 128 GPUs, each drawing up to 1.4 kW.

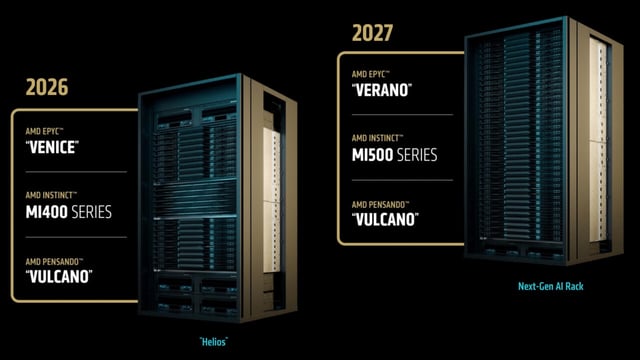

- AMD also revealed Helios, a rack-scale architecture for next year’s MI400 accelerators. Helios is designed for both scale-up and scale-out configurations and is built on open standards.

- The forthcoming MI400 GPUs are projected to double the MI355X’s FP4 throughput and to carry 432 GB of HBM4 memory with higher per-GPU bandwidth.

- Further out, AMD’s roadmap extends to 2027 with the Instinct MI500 series and Epyc Verano processors, which are aimed at expanding AMD’s market share against Nvidia.