Overview

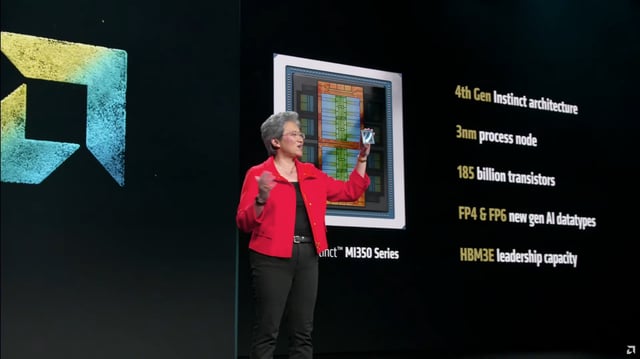

- The MI350X and MI355X pack 185 billion transistors, with compute built on TSMC’s 3nm node, and carry up to 288 GB of HBM3E memory for higher throughput and efficiency.

- AMD reports a fourfold boost in AI compute performance and a 35× leap in inference speed over the MI300X, enabled by new FP4 and FP6 data-type support and up to 20 PFLOPS of low-precision compute.

- The MI350X is optimized for air-cooled deployments at lower board power, while the liquid-cooled MI355X peaks at 1,400 W; both offer 8 TB/s of memory bandwidth.

- AMD’s own benchmarks show up to 1.3× faster inference and up to 1.1× faster training than Nvidia’s B200 and GB200 accelerators in selected AI workloads.

- The MI350 series is slated to ship through OEM partners starting in Q3 2025 and sets the stage for AMD’s next-generation MI400 GPUs, due to debut in 2026.
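To put the 288 GB capacity and the new FP4/FP6 support into perspective, the sketch below estimates how many model parameters fit in a single GPU's memory at different precisions. It is a rough, weights-only calculation under stated assumptions (not AMD figures): 1 GB is treated as 10^9 bytes, and the KV cache, activations, and any per-block scale metadata used by low-precision formats are ignored. The names `HBM_CAPACITY_GB`, `BITS_PER_VALUE`, and `max_params_billions` are illustrative only.

```python
# Rough, weights-only estimate of how many parameters fit in HBM at a given precision.
# Assumptions (illustrative, not from AMD): 1 GB = 1e9 bytes; KV cache, activations,
# and block-scale metadata for low-precision formats are NOT counted.

HBM_CAPACITY_GB = 288  # per-GPU HBM3E capacity quoted for the MI350 series

# Bits needed to store one value in each data type (scale factors ignored).
BITS_PER_VALUE = {
    "FP16": 16,
    "FP8": 8,
    "FP6": 6,
    "FP4": 4,
}


def max_params_billions(capacity_gb: float, bits: int) -> float:
    """Return how many parameters (in billions) fit in capacity_gb at `bits` per value."""
    capacity_bits = capacity_gb * 1e9 * 8  # GB -> bytes -> bits
    return capacity_bits / bits / 1e9      # parameters, expressed in billions


if __name__ == "__main__":
    for dtype, bits in BITS_PER_VALUE.items():
        print(f"{dtype}: ~{max_params_billions(HBM_CAPACITY_GB, bits):.0f}B parameters")
```

Under these assumptions the script prints roughly 144B parameters at FP16, 288B at FP8, 384B at FP6, and 576B at FP4, so FP4 quadruples weight capacity relative to FP16. Real deployments need headroom beyond this for the KV cache and activations.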