Overview

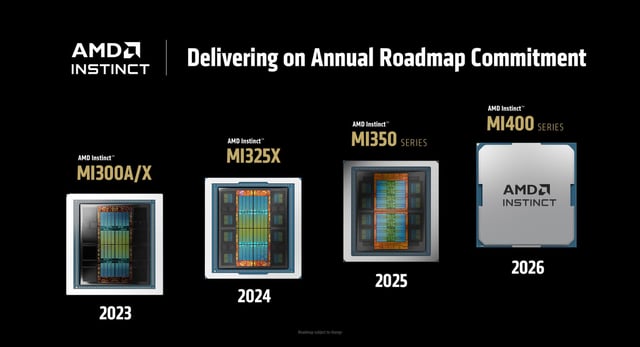

- AMD says the MI350X and MI355X deliver up to four times the AI compute performance and up to 35 times the inference performance of the MI300X series.

- Built on the CDNA4 architecture and TSMC’s 3nm process, the GPUs feature up to 288GB of HBM3E memory and 8 TB/s of memory bandwidth.

- AMD asserts the MI355X outpaces Nvidia’s Blackwell B200 by up to 1.3× in key AI tests such as Llama 3.1 405B inference.

- Oracle Cloud Infrastructure, Meta and Microsoft have begun deploying MI350 GPUs for AI training and inference in their data centers.

- AMD has acquired 25 AI-focused companies in the past year to bolster its chip ecosystem and enhance the ROCm software stack.