Overview

- Alibaba scrapped its hybrid thinking toggle after community feedback and benchmark results showed that it performed worse than separate thinking and non-thinking models.

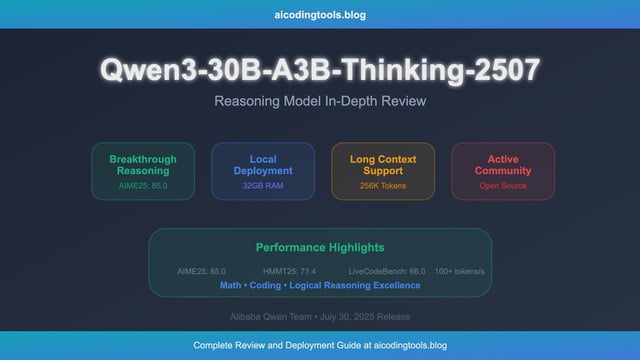

- The Qwen3-2507 lineup introduces distinct Instruct and Thinking variants of both the 235B- and 30B-parameter models, each labeled with the "-2507" suffix.

- The instruct-tuned Qwen3-235B-A22B-Instruct-2507 scored 2.8 times as high as its April counterpart on the AIME25 math benchmark.

- The thinking-tuned models posted 13%–54% improvements on AIME25, pointing to stronger long-form reasoning on demanding problems.

- Alibaba released these variants under the Apache 2.0 license with BF16 and FP8 support. The Qwen team has also signaled that its hybrid-reasoning research will continue and that a code-tuned 30B MoE model is on the way.