Overview

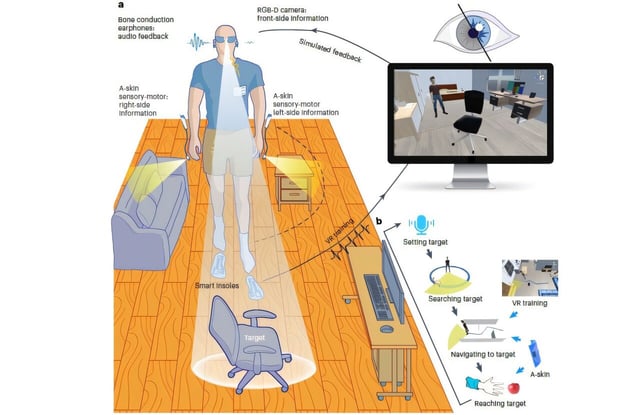

- The wearable device uses AI to process live video from a camera mounted on glasses, identifying obstacles and guiding users with audio and haptic feedback.

- In trials, participants showed a 25% improvement in navigation performance, including walking distance and time, compared with using a conventional cane.

- The system combines two feedback channels: audio cues delivered every 250 milliseconds, and vibration alerts from artificial-skin patches that warn of detected obstacles.

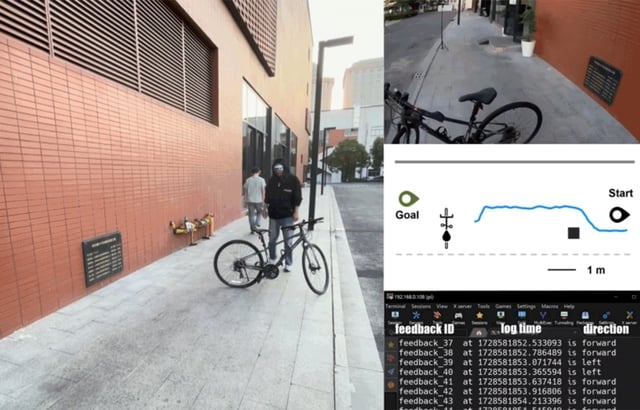

- Testing included indoor maze trials and real-world scenarios, such as navigating city streets and cluttered environments, with successful results.

- Researchers emphasize the need for further refinements to ensure the prototype's safety, reliability, and practicality for daily use.
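
To make the feedback timing above concrete, here is a minimal sketch of how audio cues at a 250-millisecond cadence could be combined with obstacle-triggered vibration alerts. It is not the researchers' implementation. Only the 250 ms interval comes from the overview; the `Detection` type, the `feedback_events` function, and the 1.0 m vibration threshold are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

AUDIO_INTERVAL_MS = 250   # audio cue cadence stated in the overview
HAPTIC_DISTANCE_M = 1.0   # hypothetical threshold for a vibration alert


@dataclass
class Detection:
    """One processed video frame (hypothetical structure)."""
    t_ms: int                              # frame timestamp in milliseconds
    obstacle_distance_m: Optional[float]   # None when no obstacle is seen


def feedback_events(detections: List[Detection]) -> List[Tuple[int, str]]:
    """Return (timestamp, channel) pairs for a stream of frames.

    Audio cues are emitted at most once per AUDIO_INTERVAL_MS.
    A haptic alert is emitted for any frame whose nearest obstacle
    is within HAPTIC_DISTANCE_M.
    """
    events: List[Tuple[int, str]] = []
    next_audio_ms = 0
    for d in detections:
        if d.t_ms >= next_audio_ms:
            events.append((d.t_ms, "audio"))
            next_audio_ms = d.t_ms + AUDIO_INTERVAL_MS
        close = (
            d.obstacle_distance_m is not None
            and d.obstacle_distance_m <= HAPTIC_DISTANCE_M
        )
        if close:
            events.append((d.t_ms, "haptic"))
    return events


# Frames every 50 ms for half a second, with one close obstacle at 300 ms.
frames = [
    Detection(t, 0.8 if t == 300 else None) for t in range(0, 501, 50)
]
events = feedback_events(frames)
```

In this sketch the audio channel runs on a fixed schedule while the haptic channel is event-driven, so a nearby obstacle can trigger a vibration between two audio cues.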